Intel Unveils Sapphire Rapids: Next-Generation Server CPUs

With Ice Lake-based Xeons shipping in volume, Intel has turned its attention to its next-generation data center CPU – Sapphire Rapids. Today, at Intel Architecture Day 2021, the company unveiled the details of those processors.

This article is part of a series of articles covering Intel’s 2021 Architecture Day:

- Intel’s Gracemont Small Core Eclipses Last-Gen Big Core Performance

- Intel Details Golden Cove For Next-Generation Client and Server CPUs

- Intel Unveils Alder Lake: Next-Generation Mainstream Heterogeneous Multi-Core SoC

- Intel Unveils Sapphire Rapids: Next-Generation Server CPUs

- Intel Introduces Thread Director For Heterogeneous Multi-Core Workload Scheduling

- Intel’s Mount Evans: Intel’s First ASIC DPU

- Intel Unveils Xe HPG – Discrete Graphics For Gamers

- Intel Unveils Xe HPC And Ponte Vecchio

Package – A Tiled Server Chip

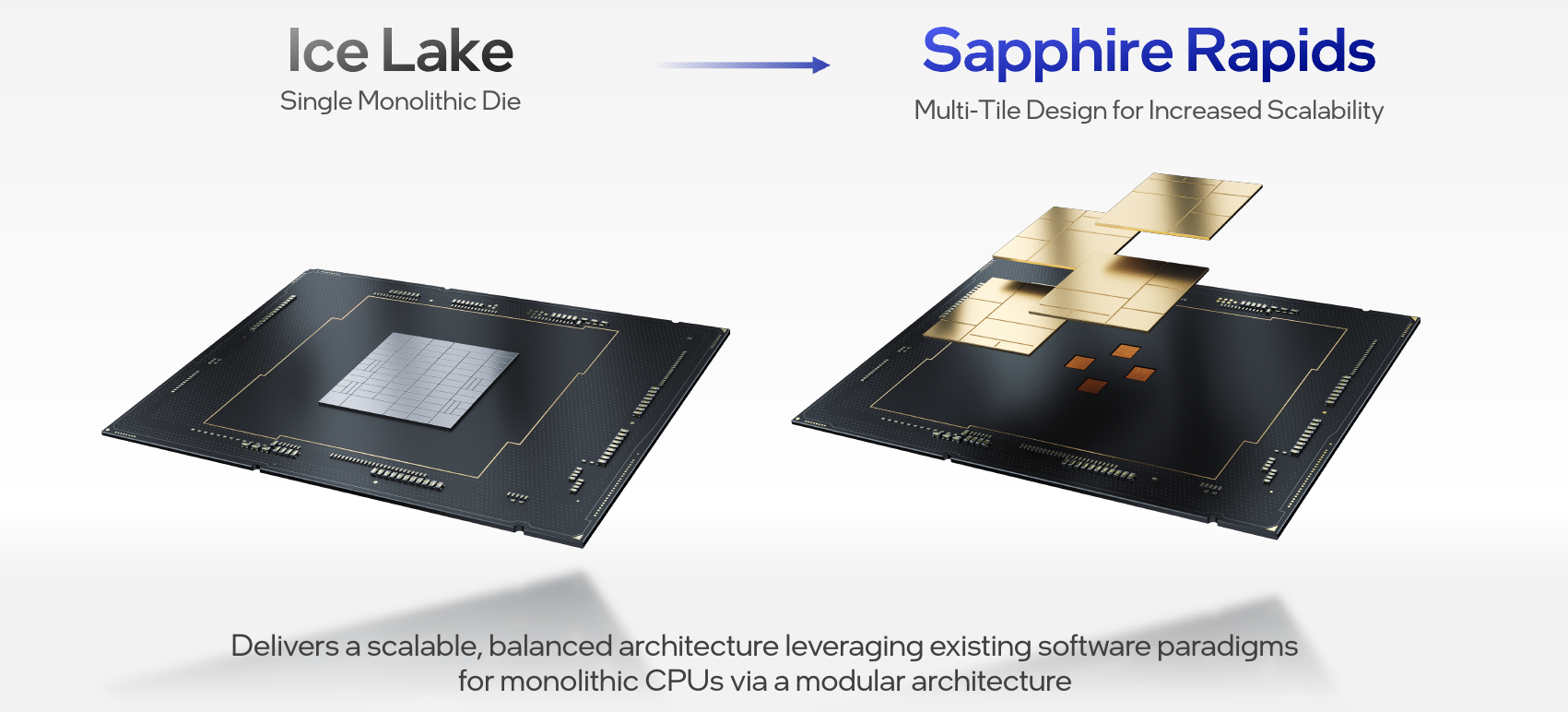

To scale its next-generation Xeon, codenamed Sapphire Rapids, to more cores without sacrificing performance, Intel had to split the design into multiple dies. This was done out of necessity rather than choice, since a monolithic design would have far exceeded the reticle limit. But too many small dies also hurt performance. The middle ground Intel settled on is a small number of very large chiplets stitched together.

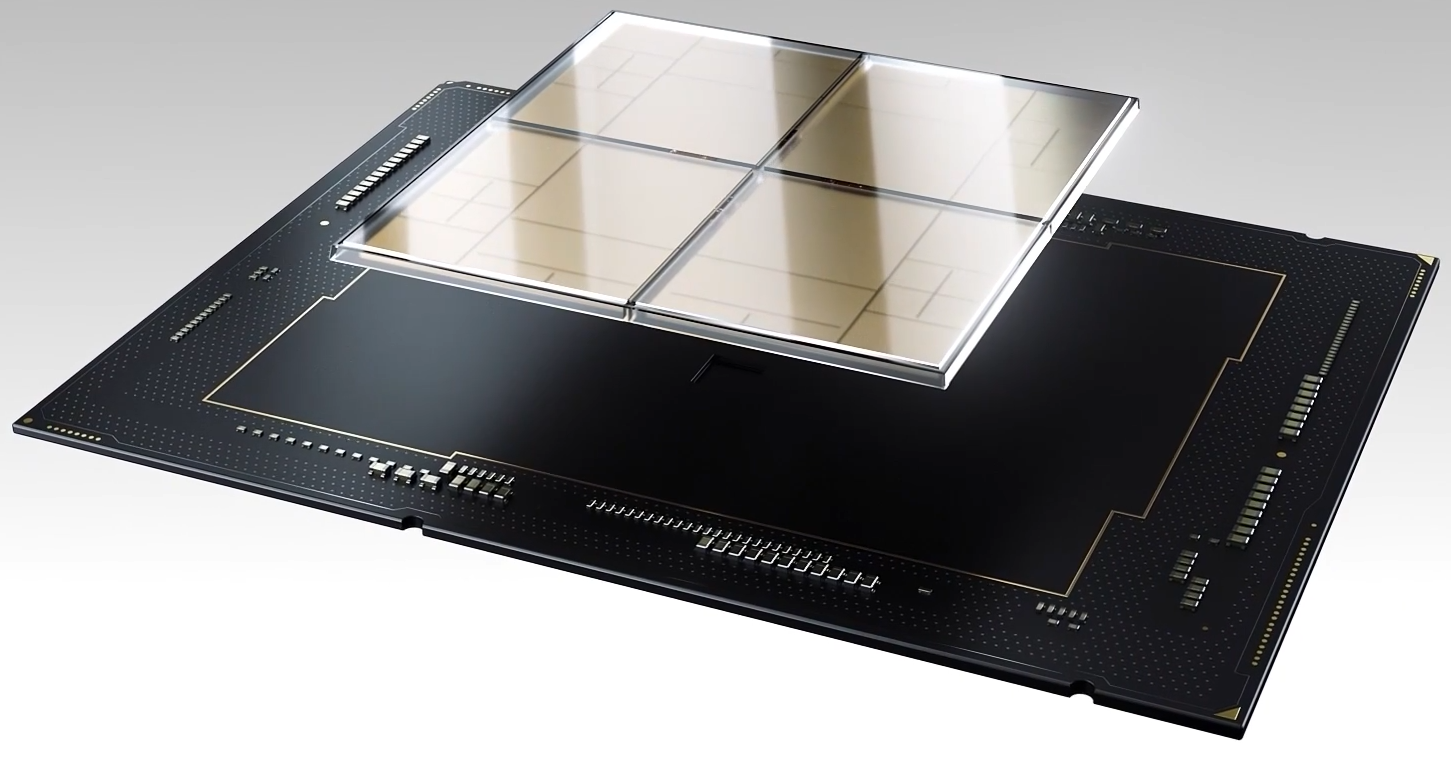

For Sapphire Rapids, the company is using its EMIB silicon bridge technology to package four Sapphire Rapids dies together, forming a near-monolithic processor. “Sapphire Rapids is the first Xeon processor built using EMIB – our latest 55-micron bump pitch silicon bridge technology. This innovative new technology enables independent dies to be integrated together in a package to realize a single logical processor. The resulting performance, power, and density are comparable to a monolithic die,” said Nevine Nassif, Sapphire Rapids lead designer.

The actual chip is shown below.

Feels Like A Single Chip

One of the major design goals of Sapphire Rapids was to use chiplets (or tiles) and still have the entire chip appear as a single monolithic die. To that end, Intel says that every thread on every core on every tile has full access to all the resources on all the tiles, including the cache, memory, and I/O. Intel says that Sapphire Rapids provides consistent low latency and high cross-sectional bandwidth across the entire SoC and across tiles.

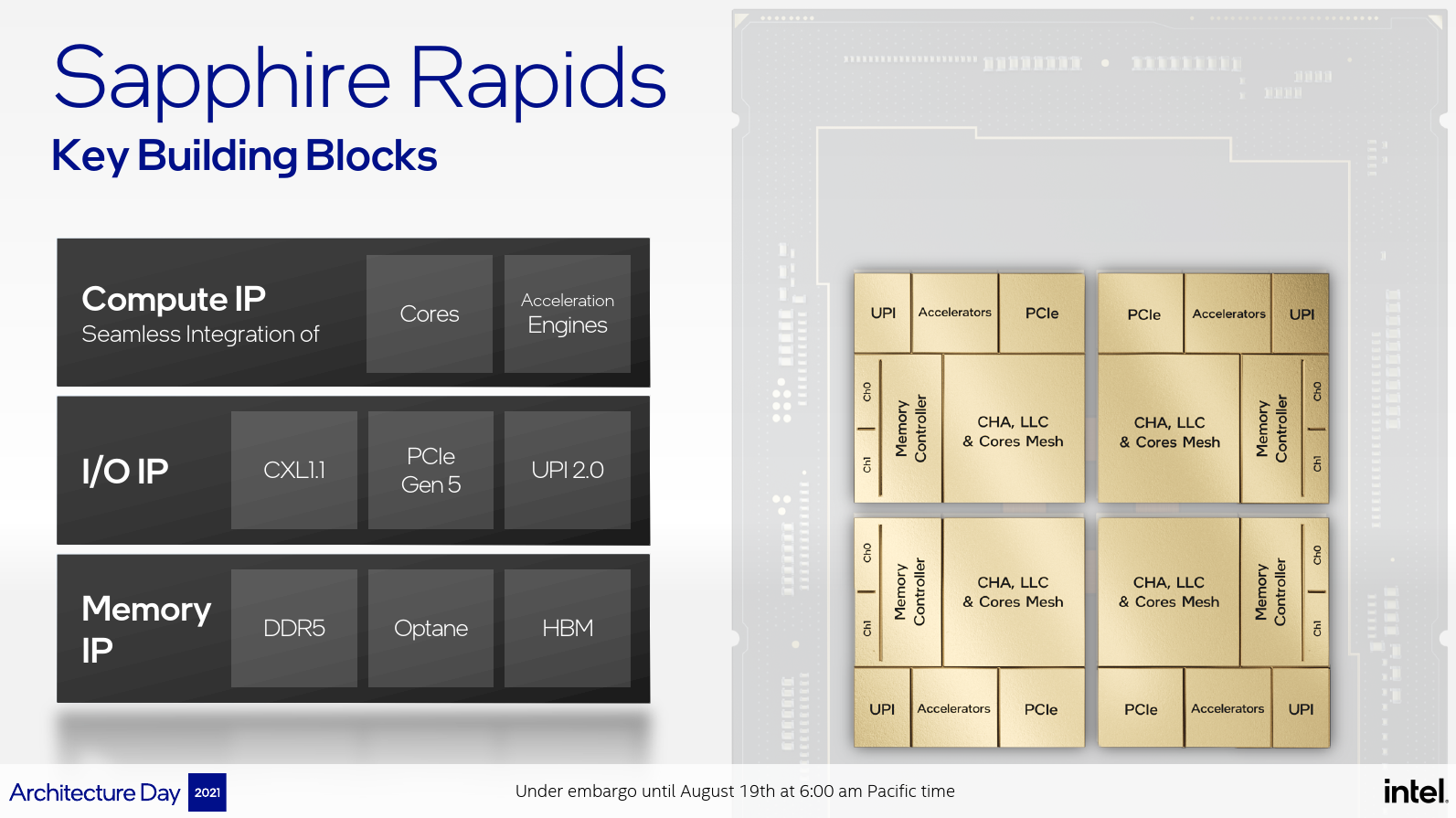

The chip integrates four dies. There are actually two different dies (i.e., two mask sets): one pair of dies is a mirror of the other pair. Because today is just an architectural reveal and not a product launch, the actual core count per die, frequencies, and other similar specs are not being disclosed. At a high level, Sapphire Rapids will be the first Xeon processor to introduce DDR5 memory support, next-generation Optane, and HBM support. Additionally, on the I/O connectivity side, Sapphire Rapids will introduce PCIe Gen5 support along with CXL 1.1 support and UPI 2.0 with higher data rates in multi-socket environments.

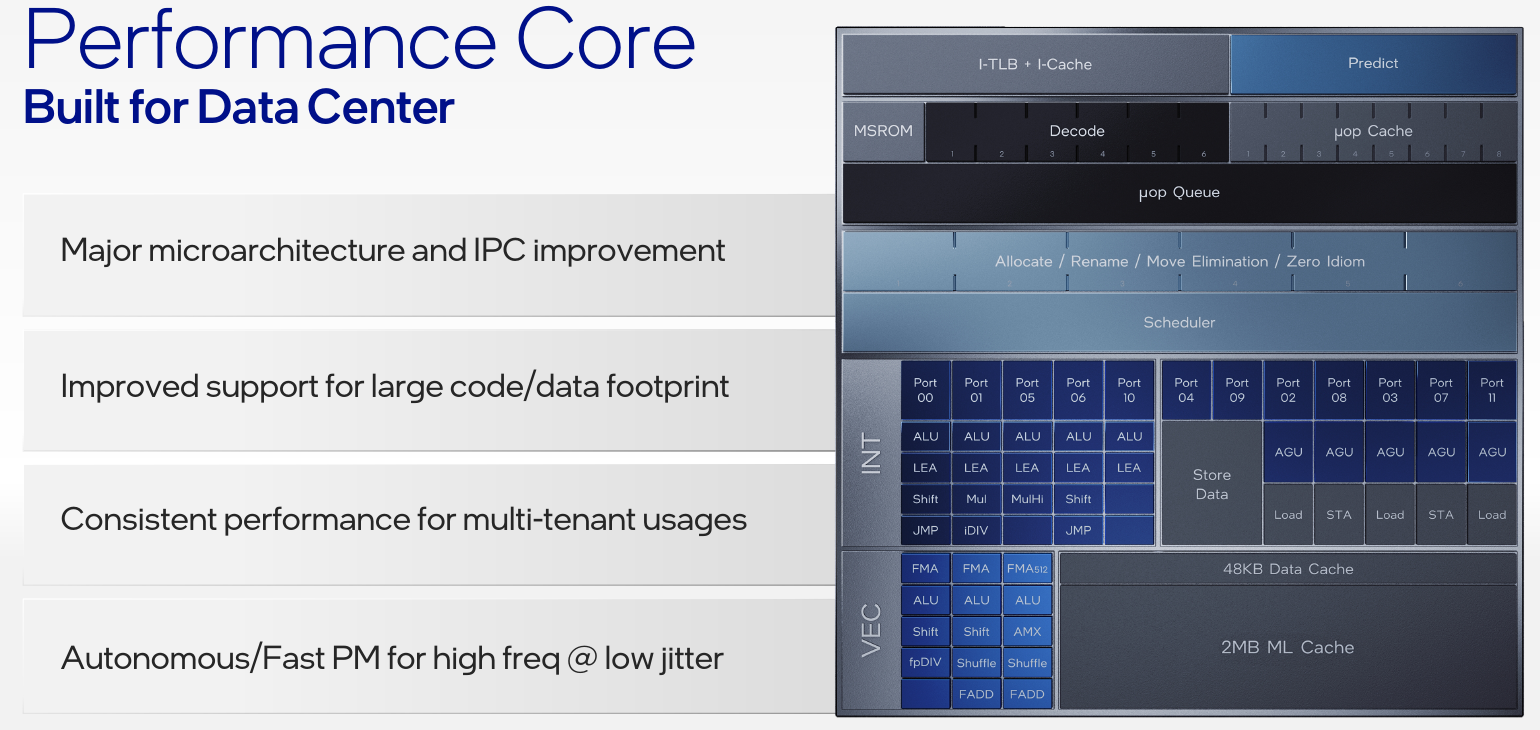

Golden Cove, with Data Center Extras

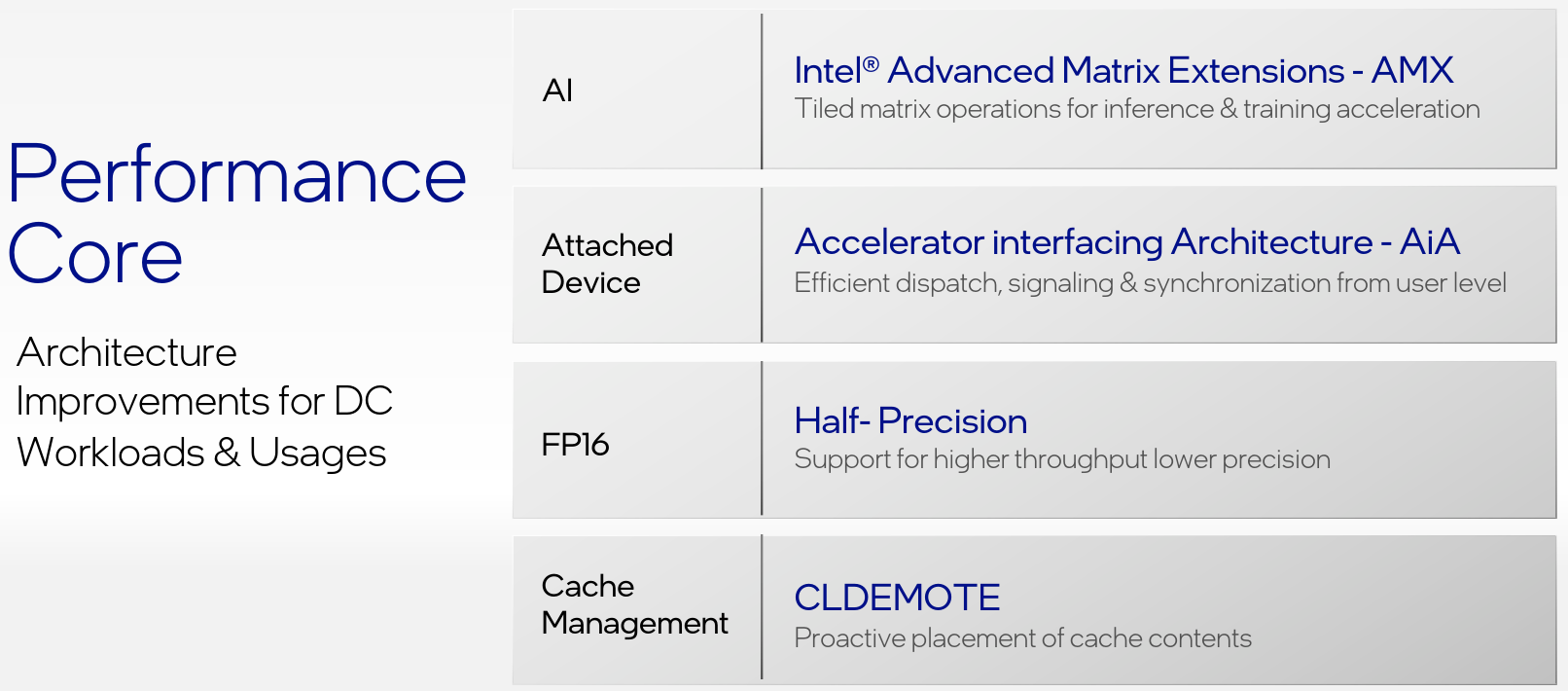

Sapphire Rapids integrates the new Golden Cove cores. Those cores were also detailed today at Architecture Day. You can find the details here. Beyond the common core features, the Sapphire Rapids version of Golden Cove incorporates a set of features not found in the client version. This includes the new Advanced Matrix Extensions (AMX), half-precision floating-point vector (AVX-512) support, new cache management, and attached device extensions.

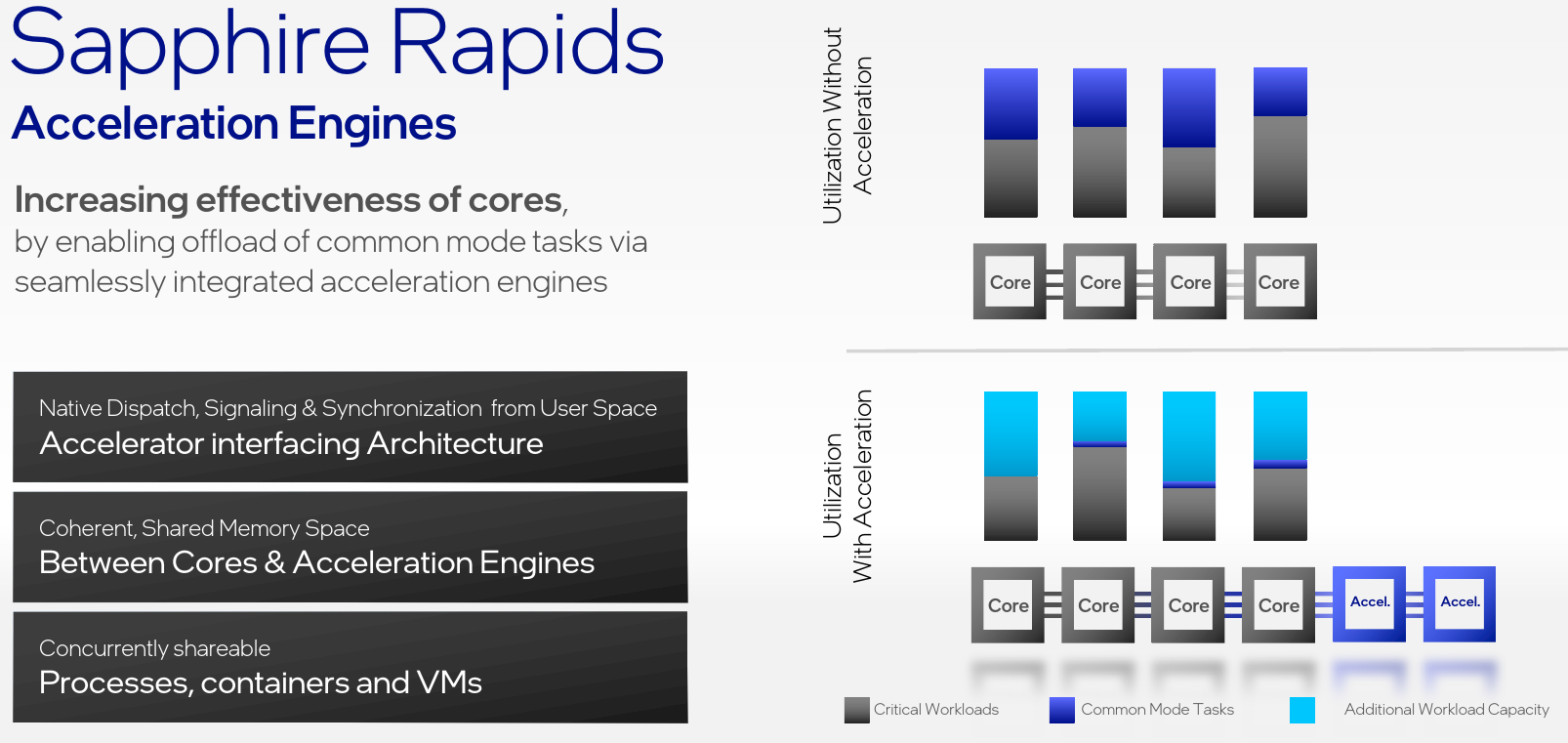

Acceleration Engines

One of the new features of Sapphire Rapids is the introduction of acceleration engines that can be used to offload intensive common tasks and reduce the utilization of the cores, freeing them for more complex workloads. There are a couple of new accelerators on Sapphire Rapids.

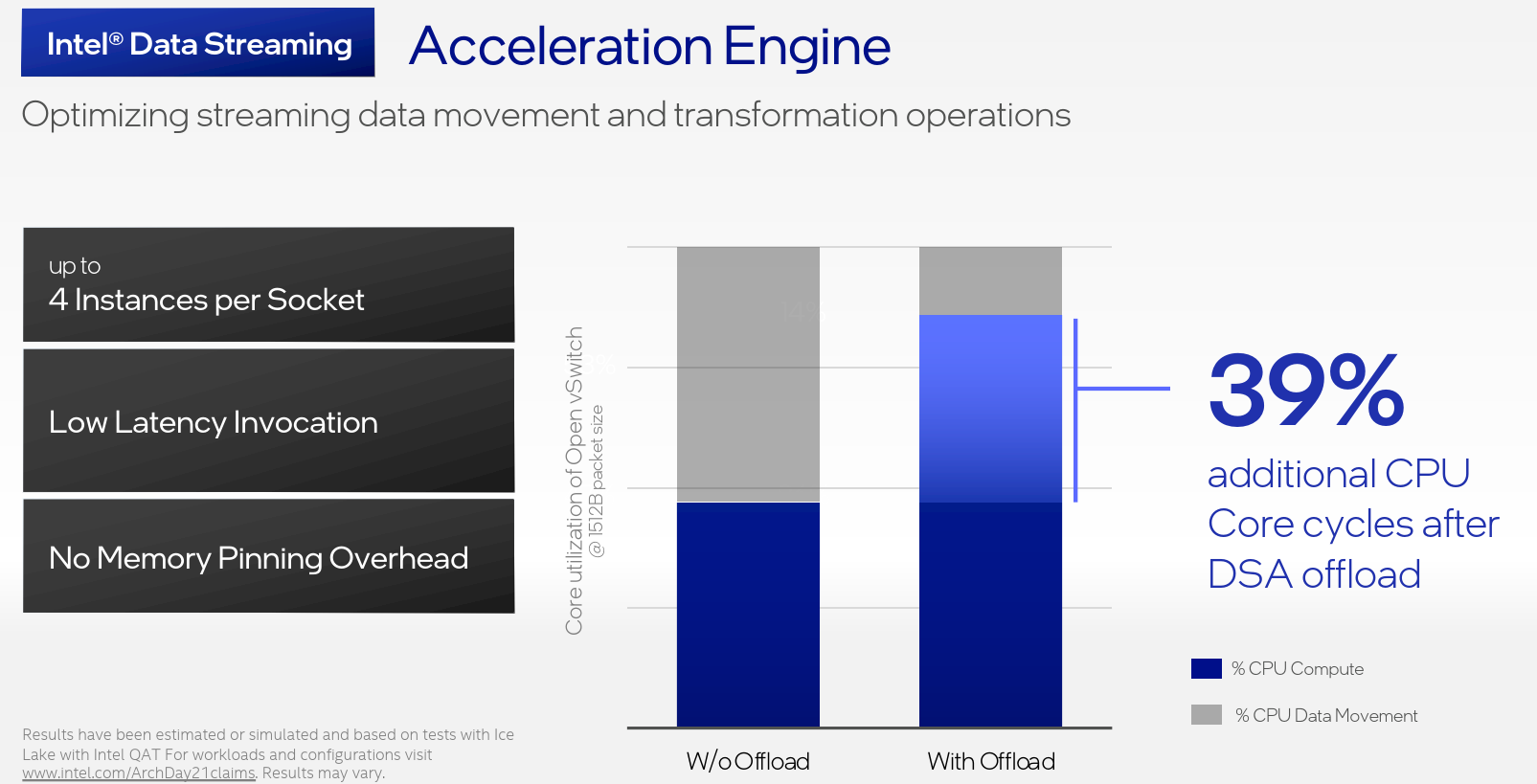

The first integrated accelerator Intel disclosed is the Data Streaming Accelerator (DSA), an engine designed to optimize data streaming operations. The DSA is capable of moving data between CPU caches, DDR memory, and other I/O attached devices. Workloads such as packet processing, data reduction, and fast checkpointing for virtual machine migration can take advantage of the DSA to free up CPU cycles. In an Intel demonstration of Sapphire Rapids running Open vSwitch, offloading the work to up to four DSA instances on the chip reduced CPU utilization by around 40% while achieving around 2.5x the performance. In other words, in this specific demonstration, the result is similar to doubling the number of cores.

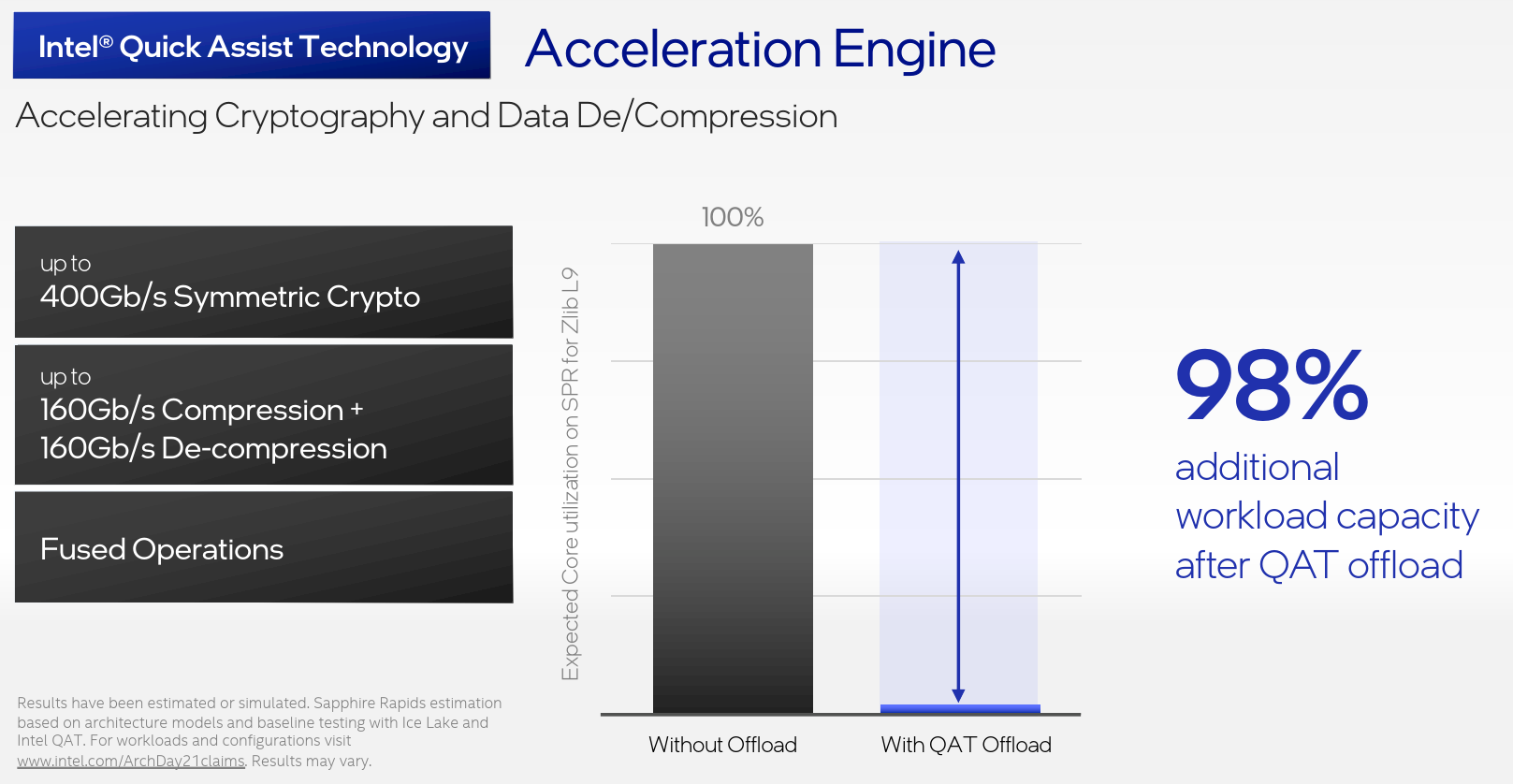

Another accelerator that Intel integrated into Sapphire Rapids is QuickAssist Technology (QAT), which was previously found in its chipsets. Sapphire Rapids will feature next-generation QAT that supports the most common crypto, hash, and compression algorithms. QAT can also fuse or chain those operations together. The QAT on Sapphire Rapids is said to support up to 400 Gb/s of crypto while simultaneously performing compression and decompression at up to 160 Gb/s each.
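To put those QAT figures in more familiar units, the short sketch below converts the quoted rates from gigabits per second to gigabytes per second. It is plain unit arithmetic on the numbers above, not a model of how QAT behaves; the function name `gbit_to_gbyte` is just an illustrative helper.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a rate in gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbit_per_s / 8


# Peak rates quoted for the QAT block on Sapphire Rapids, in Gb/s.
qat_rates_gbit = {
    "crypto": 400,
    "compression": 160,
    "decompression": 160,
}

# The same rates expressed in GB/s.
qat_rates_gbyte = {name: gbit_to_gbyte(rate) for name, rate in qat_rates_gbit.items()}

for name, rate in qat_rates_gbyte.items():
    print(f"{name}: up to {rate:.0f} GB/s")
```

In other words, the quoted peaks work out to roughly 50 GB/s of crypto and 20 GB/s each of compression and decompression.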

PCIe Gen5 & Faster UPI 2.0 Links

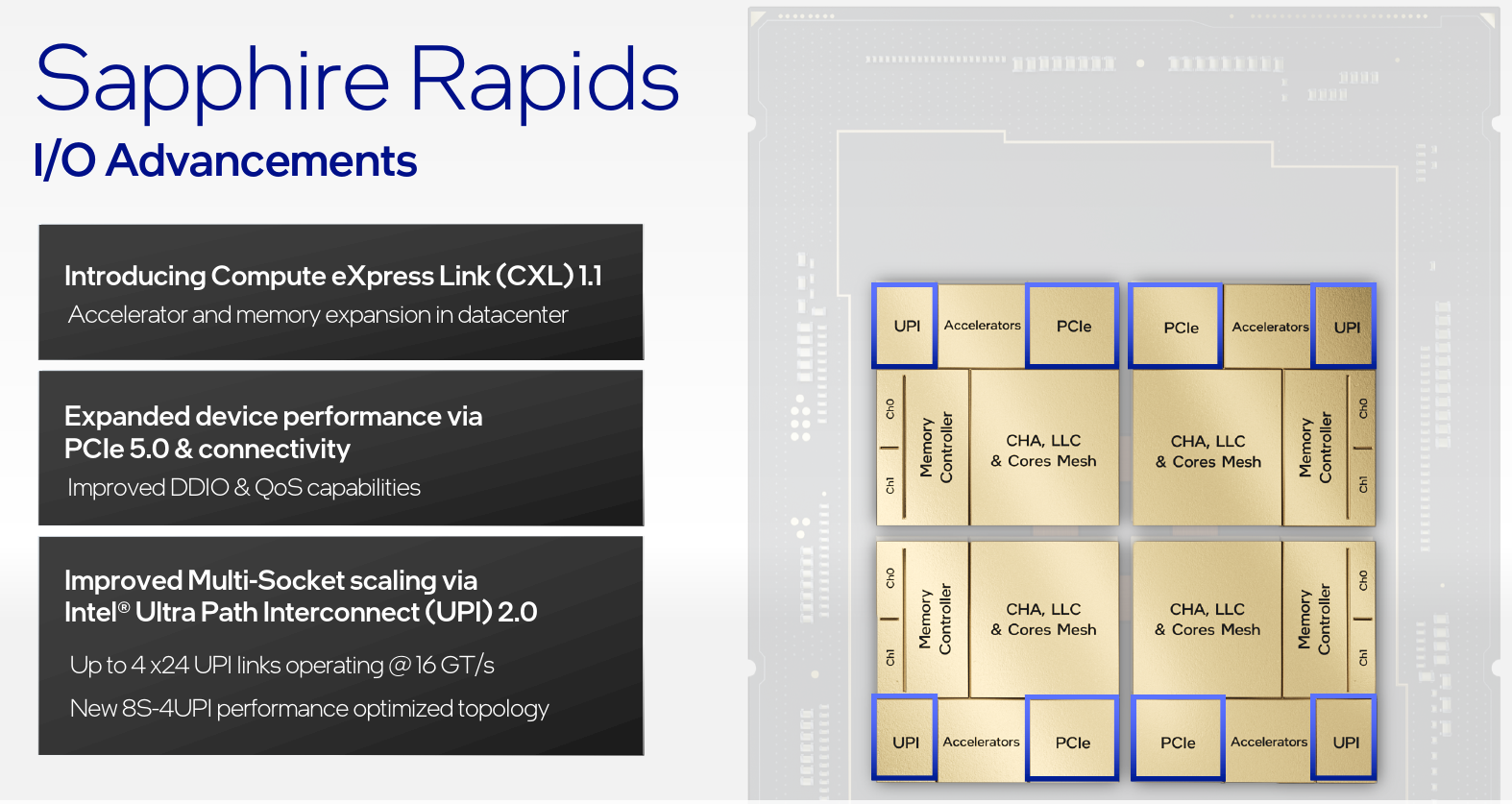

Sapphire Rapids upgrades the I/O support to PCIe Gen5. Intel also upgraded the chip-to-chip UPI links to UPI 2.0, which increases the link transfer rate to 16 GT/s. Sapphire Rapids will support up to four x24 UPI 2.0 links, enabling new 8-socket 4-UPI configurations.
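For a rough sense of scale, the sketch below turns the UPI 2.0 figures into a raw signaling rate. It rests on assumptions that Intel did not state: that "x24" means 24 lanes per link, that each lane carries one bit per transfer, and that protocol and encoding overhead can be ignored. The result is therefore an upper bound on raw signaling per direction, not a usable-bandwidth figure from Intel.

```python
def raw_link_gbytes_per_s(transfer_rate_gt_s: float, lanes: int) -> float:
    """Raw per-direction signaling rate of one link in GB/s.

    Assumes one bit per lane per transfer and no protocol overhead
    (both are simplifying assumptions, not disclosed specifications).
    """
    return transfer_rate_gt_s * lanes / 8


UPI2_TRANSFER_RATE_GT_S = 16  # from the article
UPI2_LANES_PER_LINK = 24      # assumed reading of "x24"
UPI2_LINKS = 4                # "up to 4" links per socket

per_link = raw_link_gbytes_per_s(UPI2_TRANSFER_RATE_GT_S, UPI2_LANES_PER_LINK)
all_links = per_link * UPI2_LINKS

print(f"raw per link: {per_link:.0f} GB/s per direction")
print(f"raw across {UPI2_LINKS} links: {all_links:.0f} GB/s per direction")
```

Under these assumptions, each link tops out at 48 GB/s of raw signaling per direction, or 192 GB/s across four links. Real throughput would be lower once protocol overhead is accounted for.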

DDR5 Memory And HBM

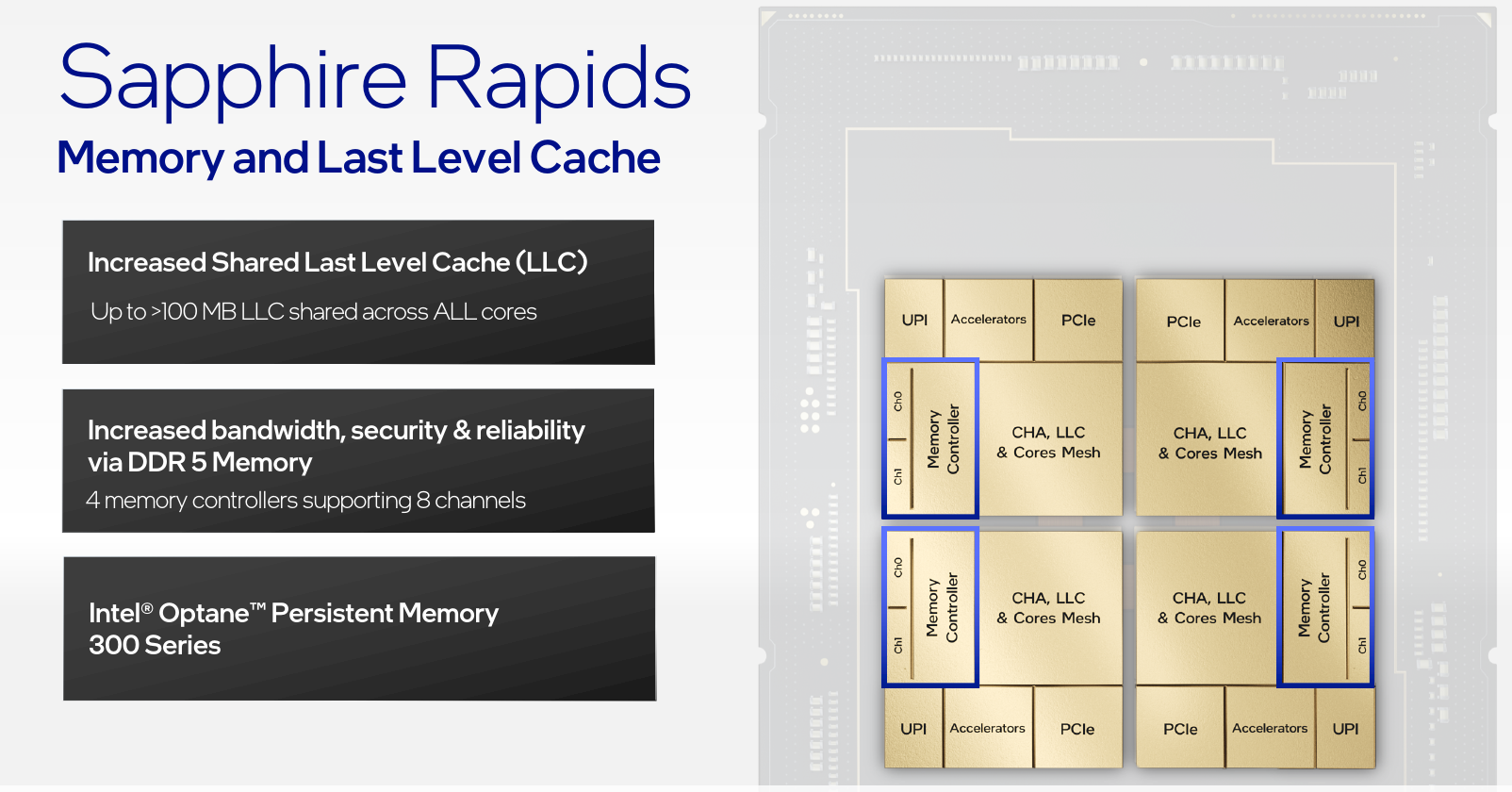

Across all cores on Sapphire Rapids, Intel says that the new processor will feature more than 100 MiB of shared last-level cache. Each tile comprises a single memory controller supporting two memory channels. With four tiles in each Sapphire Rapids processor, the entire chip offers four memory controllers supporting up to eight memory channels. The memory controllers have been upgraded to support DDR5 memory as well as next-generation Intel Optane memory.
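The channel count follows directly from the tile layout, as the sketch below shows. The peak-bandwidth part is only illustrative: Intel did not disclose memory speeds at Architecture Day, so the DDR5-4800 data rate and the 8-byte (64-bit) data width per channel used here are assumptions chosen for the example.

```python
TILES = 4                    # from the article
CONTROLLERS_PER_TILE = 1     # from the article
CHANNELS_PER_CONTROLLER = 2  # from the article


def total_channels(tiles: int, controllers_per_tile: int, channels_per_controller: int) -> int:
    """Total number of memory channels on the package."""
    return tiles * controllers_per_tile * channels_per_controller


def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal), ignoring all real-world overheads."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


channels = total_channels(TILES, CONTROLLERS_PER_TILE, CHANNELS_PER_CONTROLLER)

# ASSUMPTION: DDR5-4800 is a hypothetical data rate for illustration only.
ASSUMED_MT_PER_S = 4800
peak = peak_bandwidth_gb_s(channels, ASSUMED_MT_PER_S)

print(f"memory channels: {channels}")
print(f"theoretical peak at DDR5-{ASSUMED_MT_PER_S}: {peak:.1f} GB/s")
```

With the assumed data rate, eight channels would give a theoretical peak of about 307 GB/s. A different DDR5 speed grade scales that number proportionally.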

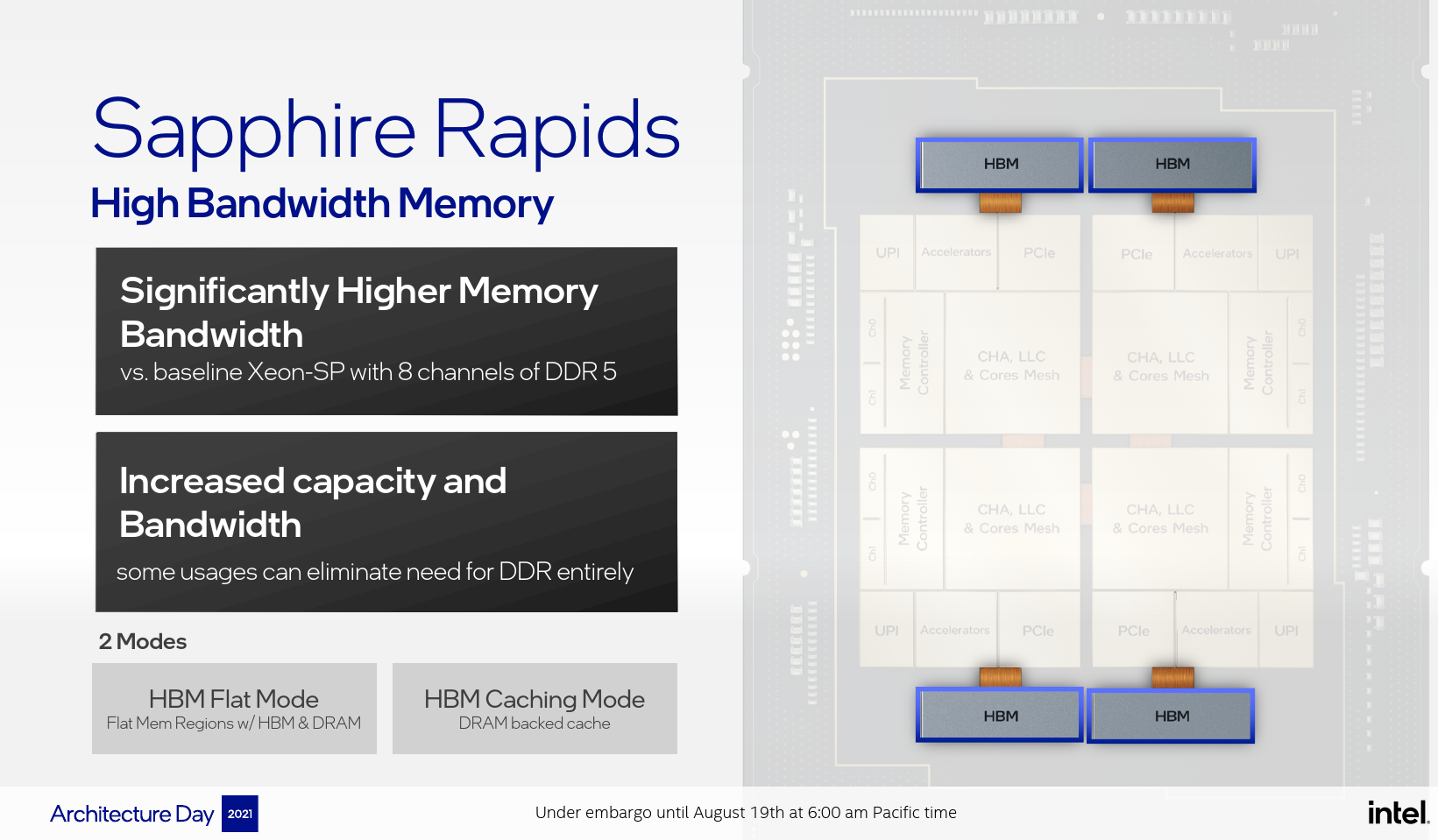

In addition to the DDR5 and Optane memory support, Sapphire Rapids will come in various SKUs that also incorporate high-bandwidth memory (HBM). The HBM version of Sapphire Rapids is particularly suitable for HPC applications, machine learning, in-memory data analytics, and other heavily memory-bound applications. It’s worth pointing out that Sapphire Rapids HBM will come with two configurable modes: a memory tiering mode that exposes HBM and DDR together as software-visible memory, and a caching mode that provides software-transparent caching between HBM and DDR.
