Centaur Unveils Its New Server-Class x86 Core: CNS; Adds AVX-512

Unified Scheduler

Centaur employs a relatively wide unified scheduler design. CNS has ten execution ports, physically partitioned into three groups: integer, floating-point (including AVX), and memory. In terms of issue width, this rivals recent designs from both Intel and AMD, although the partitioning of ports into separate integer, floating-point, and memory groups makes the organization closer to AMD's design.

Scheduler Comparison

| Company | Centaur | AMD | Intel | AMD | Intel |
|---|---|---|---|---|---|
| uArch | CNS | Zen | Haswell | Zen 2 | Sunny Cove |
| Scheduler type | unified | split | unified | split | unified |
| Scheduler entries | 64 | 84 [6×14] + 96 | 97 | 64 [4×16] + 28 + 36 | >97 |
| Issue width | 10/cycle | 10/cycle | 8/cycle | 11/cycle | 10/cycle |
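To make the unified organization concrete, here is a toy Python model of it. It is not Centaur's actual select logic. A single 64-entry window feeds all ten ports, and each cycle the oldest ready uops of each class claim the free ports of that class. The 4/3/3 port split is an assumption: the three floating-point and three memory ports are described in this article, and the four integer ports are simply what remains of the ten. The names `Uop`, `PORTS`, and `select` are hypothetical.

```python
from dataclasses import dataclass

# Ports per class. FP (3) and memory (3) are from the article;
# integer (4) is assumed to be the remainder of the ten ports.
PORTS = {"int": 4, "fp": 3, "mem": 3}
SCHEDULER_ENTRIES = 64  # unified scheduler capacity from the table


@dataclass
class Uop:
    seq: int     # program order; a lower number means older
    kind: str    # "int", "fp", or "mem"
    ready: bool  # True once all source operands are available


def select(window: list[Uop]) -> list[Uop]:
    """Model one cycle of oldest-first selection from a single unified window."""
    assert len(window) <= SCHEDULER_ENTRIES, "window exceeds scheduler size"
    free = dict(PORTS)  # ports of each class still unclaimed this cycle
    issued = []
    for uop in sorted(window, key=lambda u: u.seq):
        if uop.ready and free[uop.kind] > 0:
            free[uop.kind] -= 1
            issued.append(uop)
    return issued


# Usage: 5 ready integer, 4 ready FP, and 4 ready memory uops.
window = [Uop(i, k, True) for i, k in enumerate(["int"] * 5 + ["fp"] * 4 + ["mem"] * 4)]
picked = select(window)
print(len(picked))  # 10: 4 int + 3 fp + 3 mem, one uop of each class left waiting
```

In a unified window any entry can go to any port of its class; in a split design each entry is bound to the queue in front of a particular port group, which this sketch does not model.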

In this article we focus on two areas, the floating-point/vector unit and the memory subsystem, since those two areas received the majority of the improvements.

Floating Point & Vector – Supporting AVX-512

The CNS core features three dedicated ports for floating-point and vector operations. Two of the ports support FMA operations, while the third hosts the divide and crypto units. All three pipes are 256 bits wide, which means you are looking at a design with throughput very similar to AMD's Zen 2 core. In terms of raw compute power, each core delivers 16 double-precision FLOPs/cycle, reaching parity with Zen 2, Intel Haswell and Broadwell, and all of Intel's mainstream client processors.

FLOPS Comparison

| Company | AMD | Centaur | AMD | Intel | Intel |
|---|---|---|---|---|---|
| uArch | Zen | CNS | Zen 2 | Coffee Lake | Cascade Lake |
| Peak performance (DP) | 8 FLOPs/clk | 16 FLOPs/clk | 16 FLOPs/clk | 16 FLOPs/clk | 32 FLOPs/clk |
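The figures in this table follow from simple arithmetic: FMA units × doubles per vector × 2 FLOPs per FMA (one multiply plus one add). A small Python sketch of that calculation, assuming two FMA pipes on every core listed; for Cascade Lake this means the SKUs with both 512-bit FMA units enabled. `peak_dp_flops_per_cycle` is a hypothetical helper, not a vendor tool.

```python
def peak_dp_flops_per_cycle(fma_units: int, vector_bits: int) -> int:
    """Peak double-precision FLOPs per cycle for one core.

    Each FMA counts as two FLOPs (a multiply and an add), and each
    vector holds vector_bits / 64 double-precision elements.
    """
    return fma_units * (vector_bits // 64) * 2


# (FMA units, FMA width in bits): simplified assumptions for each core.
cores = {
    "Zen": (2, 128),
    "CNS": (2, 256),
    "Zen 2": (2, 256),
    "Coffee Lake": (2, 256),
    "Cascade Lake": (2, 512),
}
for name, (units, bits) in cores.items():
    print(f"{name}: {peak_dp_flops_per_cycle(units, bits)} FLOPs/clk")
```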

AVX-512

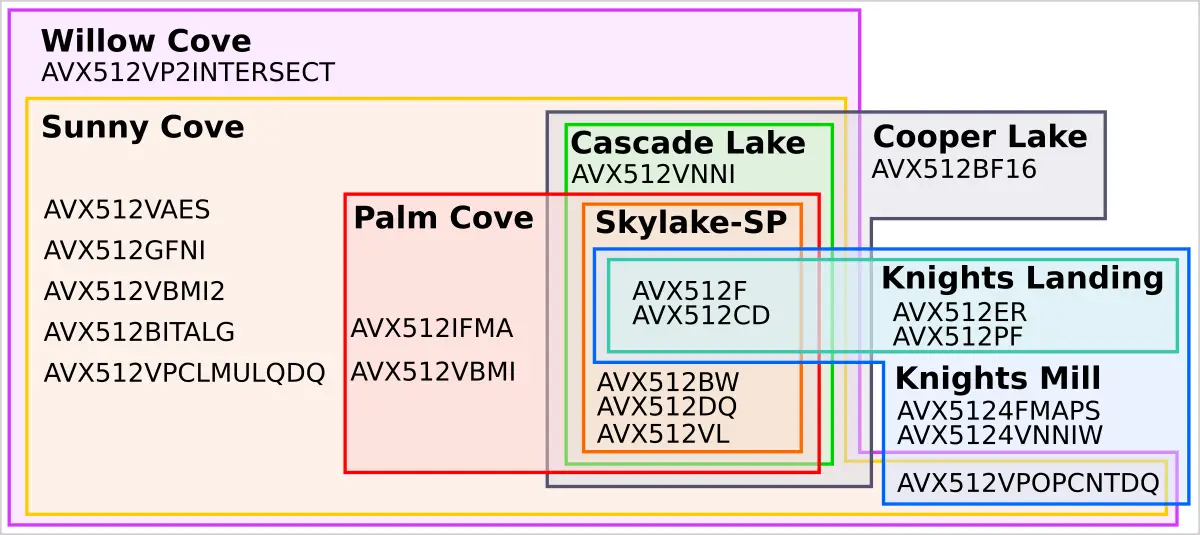

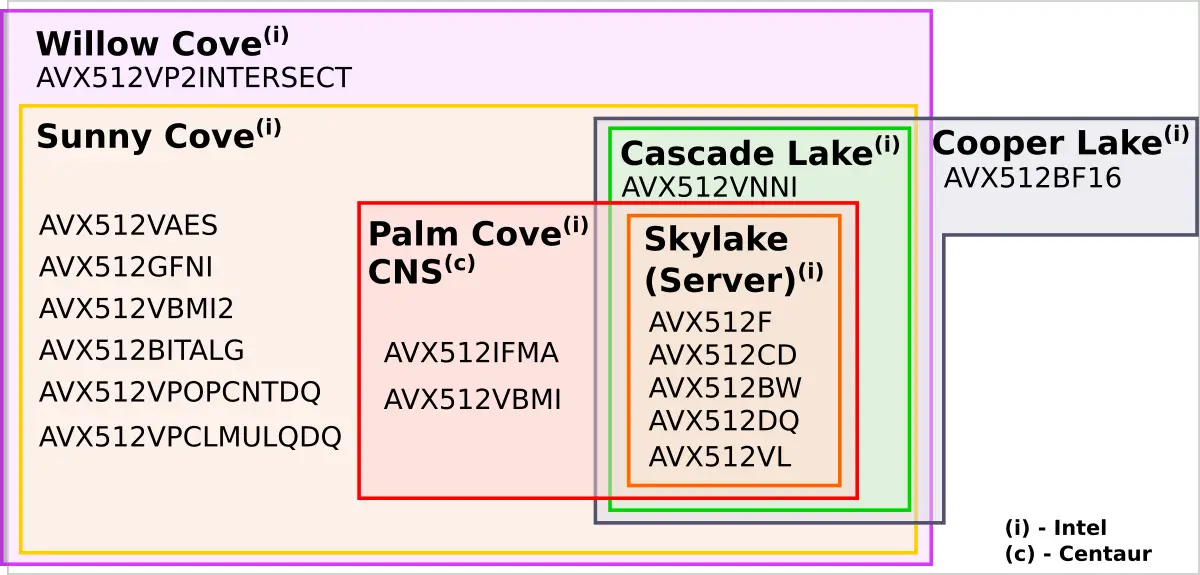

A major difference from Zen 2 is the level of x86 ISA support. To leverage the existing x86 software ecosystem, Centaur made great strides toward ISA parity with Intel, and the new CNS core supports AVX-512. But AVX-512 is not a monolithic ISA extension; it is a collective name for a family of closely related sub-extensions, and support for them varies wildly between Intel cores. The Euler diagram highlights the ISA support level among the different Intel microarchitectures.

Centaur's CNS not only catches up with Skylake (server) parts in terms of ISA support, it also adopts the extensions found in Palm Cove (the core inside Cannon Lake), namely AVX-512 IFMA and VBMI. The caveat is that, since Intel had no real shipping 10nm parts until recently, Centaur's implementation follows the specification and programming model for IFMA and VBMI, while Intel's actual hardware sometimes deviates slightly from that specification. It remains to be seen whether compatibility is 100% for those two extensions. Centaur already plans to support additional AVX-512 sub-extensions in a future core.
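To see which of these support levels a Linux machine reports, one can compare the `flags` line of `/proc/cpuinfo` against the relevant sub-extension sets. A minimal Python sketch, assuming the Linux kernel's flag spellings and that the kernel exposes every sub-extension the CPU supports; the set names and helper functions are hypothetical, and the sample line below is made up for illustration. Per the article, a CNS system would be expected to report everything in `PALM_COVE`, though that has not been checked against real CNS hardware here.

```python
# Linux /proc/cpuinfo flag names for the AVX-512 subsets discussed above.
SKYLAKE_SP = {"avx512f", "avx512cd", "avx512bw", "avx512dq", "avx512vl"}
# Palm Cove (Cannon Lake) adds IFMA and VBMI on top of Skylake-SP.
PALM_COVE = SKYLAKE_SP | {"avx512ifma", "avx512vbmi"}


def read_flags_line(path: str = "/proc/cpuinfo") -> str:
    """Return the first 'flags' line from cpuinfo, or '' if none is found."""
    with open(path) as f:
        for line in f:
            if line.startswith("flags"):
                return line
    return ""


def missing_extensions(flags_line: str, required: set[str]) -> set[str]:
    """Return the required AVX-512 flags that the flags line does not list."""
    _, _, flag_text = flags_line.partition(":")
    return required - set(flag_text.split())


# Usage with a hypothetical, truncated flags line from a Skylake-SP-class CPU.
sample = "flags\t\t: fpu sse2 avx2 avx512f avx512cd avx512bw avx512dq avx512vl"
print(sorted(missing_extensions(sample, PALM_COVE)))  # ['avx512ifma', 'avx512vbmi']
```

On a live system, `missing_extensions(read_flags_line(), PALM_COVE)` returns an empty set when every listed sub-extension is reported.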

From an implementation point of view, the CNS core's vector lanes are 256 bits wide, so AVX-512 operations are cracked into two 256-bit operations that are then scheduled independently. In other words, there is no throughput advantage over 256-bit code. This is very similar to how AMD handled 256-bit AVX in the original Zen core, where such operations had to be executed as two 128-bit operations. Nonetheless, AVX-512 support is very welcome.
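A simple Python model of this cracking, assuming two FMA pipes and that each lane-wide half occupies one pipe slot; the function names are hypothetical and real scheduling effects are not modeled. Splitting a 512-bit FMA into two 256-bit halves halves the number of operations issued per cycle but doubles the work per operation, so peak FLOPs are unchanged. The same arithmetic applies to Zen's 128-bit lanes.

```python
from math import ceil

ELEM_BITS = 64  # double precision


def uops_per_op(op_bits: int, lane_bits: int) -> int:
    """How many lane-wide uops a vector op is cracked into."""
    return ceil(op_bits / lane_bits)


def fma_flops_per_cycle(op_bits: int, lane_bits: int, fma_pipes: int = 2) -> float:
    """Peak DP FLOPs/cycle when every op is cracked into lane-wide uops."""
    ops_per_cycle = fma_pipes / uops_per_op(op_bits, lane_bits)
    return ops_per_cycle * (op_bits // ELEM_BITS) * 2  # 2 FLOPs per FMA


def cracked_fma(a, b, c, lane_bits: int = 256):
    """Compute a*b + c one independent lane-wide chunk at a time.

    Note: Python's x * y + z rounds twice, so this models only the lane
    splitting, not the single rounding of a true fused multiply-add.
    """
    lanes = lane_bits // ELEM_BITS
    out = []
    for lo in range(0, len(a), lanes):
        hi = lo + lanes
        out += [x * y + z for x, y, z in zip(a[lo:hi], b[lo:hi], c[lo:hi])]
    return out


# CNS (256-bit lanes): 512-bit and 256-bit FMAs give the same peak.
print(fma_flops_per_cycle(512, 256), fma_flops_per_cycle(256, 256))  # 16.0 16.0
# Zen (128-bit lanes): 256-bit and 128-bit FMAs give the same peak.
print(fma_flops_per_cycle(256, 128), fma_flops_per_cycle(128, 128))  # 8.0 8.0
```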

Memory Subsystem

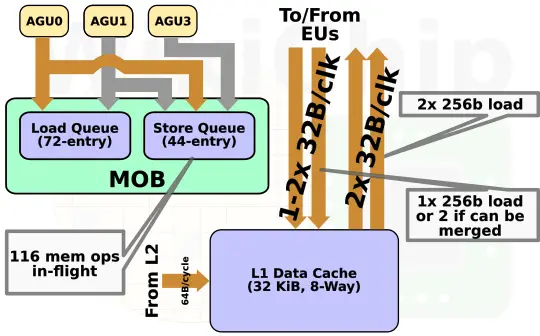

On the memory subsystem side, CNS features three memory ports: two general-purpose AGUs and one store AGU. The new core supports 116 memory operations in flight. The memory order buffer (MOB) consists of a 72-entry load buffer and a 44-entry store buffer. These numbers are identical to AMD Zen and slightly smaller than Skylake's.

Memory Order Buffer

| Company | Centaur | AMD | Intel | Intel |
|---|---|---|---|---|
| uArch | CNS | Zen | Skylake | Sunny Cove |
| Load Buffer | 72 entries | 72 entries | 72 entries | 128 entries |
| Store Buffer | 44 entries | 44 entries | 56 entries | 72 entries |
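The 116-entry figure is just the sum of the two buffers. The same arithmetic for the other cores in the table, as a short Python snippet (the `mob` dictionary is only a transcription of the table):

```python
# (load buffer, store buffer) entries, transcribed from the table above.
mob = {
    "CNS": (72, 44),
    "Zen": (72, 44),
    "Skylake": (72, 56),
    "Sunny Cove": (128, 72),
}

totals = {name: loads + stores for name, (loads, stores) in mob.items()}
for name, total in totals.items():
    print(f"{name}: {total} memory ops in flight ({total - totals['CNS']:+d} vs. CNS)")
```

This prints 116 for CNS and Zen, 128 for Skylake, and 200 for Sunny Cove.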

The data cache on CNS is 32 KiB. It is multi-ported, supporting two reads and one write every cycle. Each port is 32 B (256 bits) wide, so 512-bit memory operations, like their arithmetic counterparts, have to be cracked into two 256-bit operations. Still, because the two read ports together deliver 64 B per cycle, CNS can sustain a single 512-bit load each cycle.
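A back-of-the-envelope Python sketch of what these port widths imply, assuming ideal conditions (no bank conflicts, misalignment, or cache misses). The helper names are hypothetical, and the 2.5 GHz clock is Centaur's reference-platform frequency, not a product specification.

```python
from math import ceil

READ_PORTS, WRITE_PORTS = 2, 1  # L1D accesses per cycle, from the article
PORT_BYTES = 32                  # each port is 32 B (256 bits) wide


def load_cycles(op_bits: int) -> int:
    """Cycles of L1D read bandwidth one vector load consumes."""
    return ceil(op_bits / 8 / (READ_PORTS * PORT_BYTES))


def store_cycles(op_bits: int) -> int:
    """Cycles of L1D write bandwidth one vector store consumes."""
    return ceil(op_bits / 8 / (WRITE_PORTS * PORT_BYTES))


def peak_load_gb_per_s(ghz: float) -> float:
    """Peak L1D read bandwidth per core in GB/s at a given clock."""
    return READ_PORTS * PORT_BYTES * ghz


print(load_cycles(512), store_cycles(512))  # 1 2
print(peak_load_gb_per_s(2.5))              # 160.0
```

Under this bandwidth-only view, a 512-bit load fits in one cycle using both read ports, while a 512-bit store needs two cycles of the single 32 B write port.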

No Downclocking

Centaur went all-in on ISA support, and the result is that software developers now have a second vendor that can deliver AVX-512, beating AMD to it. The wide vector pipes give CNS strong raw floating-point and 256-bit throughput, comparable to Zen 2 and Skylake. On paper, in terms of peak FMA throughput, Centaur remains at half of what Intel's server cores can do. In reality, Intel's low-end SKUs (e.g., eight-core Xeon Silver parts) have only a single AVX-512 FMA unit enabled, so FMA throughput should be comparable in practice on those SKUs (for other operations, Skylake still wins with its two AVX-512 units).

And then there is power. Intel's design forces the core to throttle heavily on heavy 256-bit AVX workloads and even further on heavy AVX-512 workloads, sometimes to less than half of the base frequency. This gets even worse as the number of cores running heavy vector code increases. Centaur took a more balanced approach. Although the chip does have a power-capping mechanism that could be used to downclock the cores on power-sensitive SKUs, Centaur told WikiChip the SoC was designed to generally sustain all operations at the nominal operating frequency. In other words, the chip is designed not to downclock under normal conditions. Centaur's current reference platform already does this, sustaining 2.5 GHz on all cores (including the entire NCORE neural processor).
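Because sustained throughput is FLOPs per cycle multiplied by the clock a core actually holds under load, downclocking matters as much as pipe width. A per-core Python sketch, assuming CNS holds its 2.5 GHz reference-platform clock under heavy FMA load and ignoring memory bottlenecks; the helper names are hypothetical, and no Intel frequencies are assumed.

```python
def gflops_per_core(flops_per_cycle: float, ghz: float) -> float:
    """Sustained per-core GFLOPS = FLOPs per cycle x sustained clock (GHz)."""
    return flops_per_cycle * ghz


def break_even_ghz(target_gflops: float, flops_per_cycle: float) -> float:
    """Clock a core needs under vector load to match target_gflops."""
    return target_gflops / flops_per_cycle


cns = gflops_per_core(16, 2.5)  # CNS: 16 DP FLOPs/cycle at a sustained 2.5 GHz
print(cns)                       # 40.0
print(break_even_ghz(cns, 32))   # 1.25 (two AVX-512 FMA units)
print(break_even_ghz(cns, 16))   # 2.5  (one AVX-512 FMA unit)
```

Under these assumptions, a dual-FMA Intel core falls behind CNS per core only if AVX-512 load drives it below 1.25 GHz, while a single-FMA SKU must hold 2.5 GHz just to tie.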

Putting It All Together

Centaur is back, and it is back with a fairly powerful core. Since this is a technology reveal and not a product announcement, Centaur is still withholding performance numbers, power ranges, and frequencies. Those will come next year as the company gets closer to productization. At a high level, the core exceeds the capabilities of Haswell but doesn't quite reach Skylake. In many ways, it is very comparable to AMD's original Zen core but one-ups it in a number of areas. Centaur isn't stopping here; the company has a focused roadmap to improve this core further. All in all, while Centaur won't be the performance leader, it is going to offer respectable cores at a good value. No matter how you slice it, exciting times are coming to the data center, with all three x86 vendors fighting for a piece of a growing market.

To see Centaur's full value proposition, we need to take a step back and consider the full product. In a follow-up article, WikiChip will take a look at the rest of the SoC and the powerful integrated AI neural processor Centaur added to the chip.
