Intel silently launches Knights Mill

Earlier today Intel quietly launched three new Xeon Phi SKUs based on the Knights Mill microarchitecture.

SKUs

Knights Mill SKUs

| Part | Cores | Threads | TDP | Frequency |
|---|---|---|---|---|
| 7235 | 64 | 256 | 250 W | 1.3-1.4 GHz |
| 7285 | 68 | 272 | 250 W | 1.3-1.4 GHz |
| 7295 | 72 | 288 | 320 W | 1.5-1.6 GHz |

All three parts have 36 PCIe 3.0 lanes and 16 GiB of high-bandwidth Multi-Channel DRAM (MCDRAM). They also support up to 384 GiB of six-channel DDR4 memory.

Knights Mill

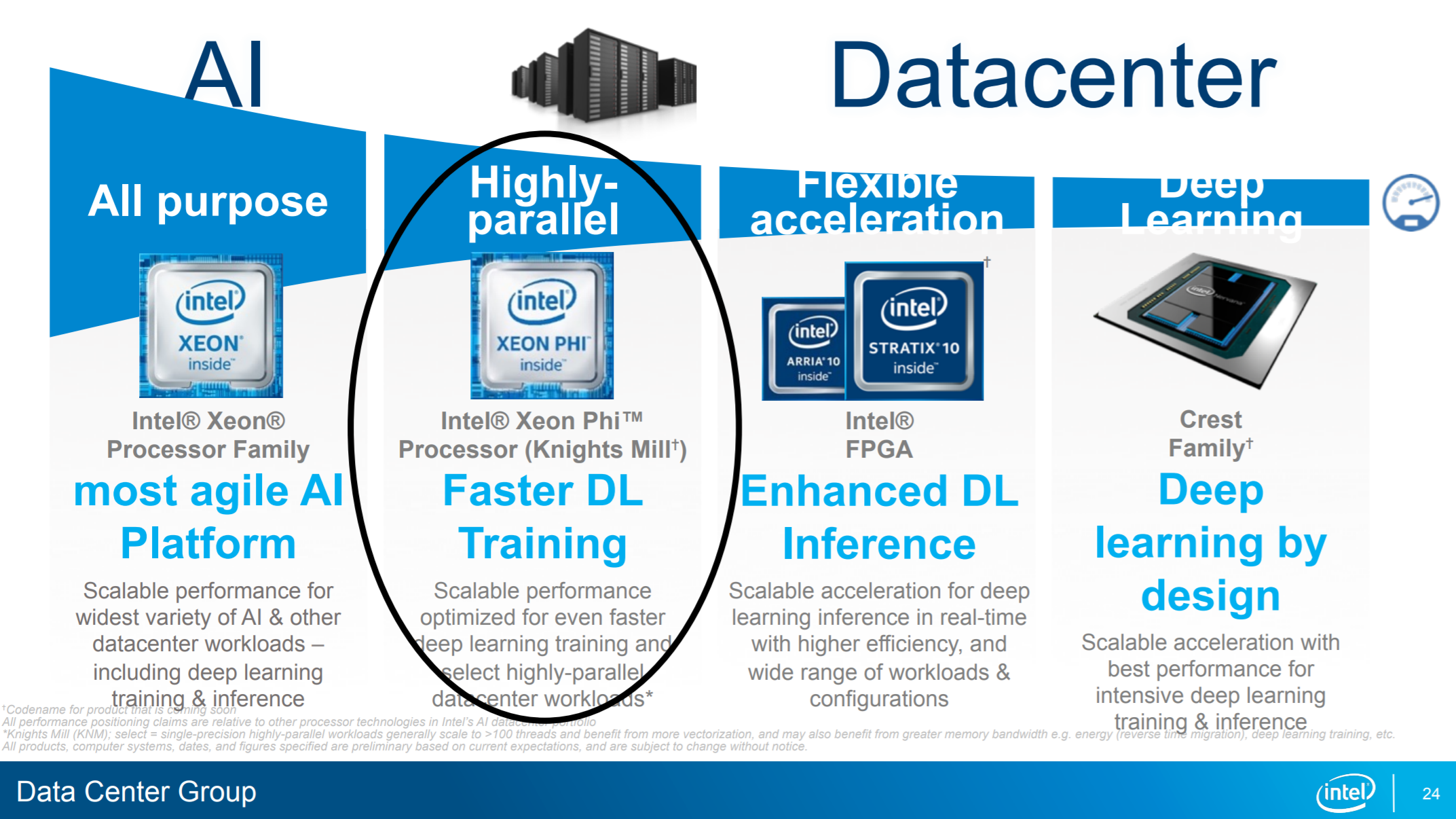

Intel unveiled Knights Mill back at Hot Chips in August. Knights Mill is a derivative of Knights Landing targeted specifically at deep learning training workloads.

As you might imagine, the system architecture is almost identical. What has changed is the pipeline implementation:

Differences between KNL and KNM

| Arch | Single Precision | Double Precision | Variable Precision |
|---|---|---|---|
| Knights Landing | 2 Ports x 1 x 32 (64 FLOPs/cycle) | 2 Ports x 1 x 16 (32 FLOPs/cycle) | N/A |
| Knights Mill | 2 Ports x 2 x 32 (128 FLOPs/cycle) | 1 Port x 1 x 16 (16 FLOPs/cycle) | 2 Ports x 2 x 64 (256 OPs/cycle) |
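To put the per-core figures in context, here is a rough sketch that scales them to whole-chip theoretical peaks. It assumes peak throughput is simply cores x operations per cycle x clock, and that the lower bound of each frequency range in the SKU table is the base clock; neither assumption comes from Intel, and sustained throughput under heavy vector load may well be lower. The names `Sku` and `peak_tera_ops` are made up for this illustration.

```python
from dataclasses import dataclass

# Per-core operations per cycle for Knights Mill, taken from the table above.
KNM_OPS_PER_CYCLE = {
    "single": 128,    # 2 ports x 2 x 32
    "double": 16,     # 1 port x 1 x 16
    "variable": 256,  # 2 ports x 2 x 64
}


@dataclass(frozen=True)
class Sku:
    name: str
    cores: int
    base_ghz: float  # ASSUMPTION: lower bound of the listed frequency range


SKUS = [
    Sku("7235", 64, 1.3),
    Sku("7285", 68, 1.3),
    Sku("7295", 72, 1.5),
]


def peak_tera_ops(sku: Sku, precision: str) -> float:
    """Theoretical peak in tera-operations per second at the assumed base clock."""
    giga_ops = sku.cores * KNM_OPS_PER_CYCLE[precision] * sku.base_ghz
    return giga_ops / 1000.0


if __name__ == "__main__":
    for sku in SKUS:
        row = ", ".join(
            f"{precision}: {peak_tera_ops(sku, precision):.2f}"
            for precision in KNM_OPS_PER_CYCLE
        )
        print(f"{sku.name}: {row} (tera-ops/s, theoretical)")
```

Under these assumptions the 7295 works out to 72 x 128 x 1.5 GHz, or roughly 13.8 single-precision TFLOPs, while its double-precision peak is only about 1.7 TFLOPs, which illustrates how far the design trades double precision for deep learning throughput.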

Those new operations are supported through the introduction of three new AVX-512 extensions:

- AVX5124FMAPS – vector instructions for deep learning on single-precision floating point

- AVX5124VNNIW – vector instructions for deep learning on enhanced word variable precision

- AVX512VPOPCNTDQ – population count instructions for doublewords and quadwords
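If you want to check whether a given Linux machine exposes these extensions, a minimal sketch is to look for the corresponding flags in `/proc/cpuinfo`. The flag spellings below (`avx512_4fmaps`, `avx512_4vnniw`, `avx512_vpopcntdq`) are the ones the Linux kernel uses; the helper name `knm_extensions` is made up for this illustration, and the approach only applies to Linux on x86.

```python
# Maps each extension name used in this article to its Linux cpuinfo flag.
KNM_FLAGS = {
    "AVX5124FMAPS": "avx512_4fmaps",
    "AVX5124VNNIW": "avx512_4vnniw",
    "AVX512VPOPCNTDQ": "avx512_vpopcntdq",
}


def knm_extensions(cpuinfo_text: str) -> dict:
    """Report which Knights Mill extensions appear in /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only "flags : ..." lines carry the feature list.
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return {ext: flag in flags for ext, flag in KNM_FLAGS.items()}
```

On a real system you would pass in the contents of `/proc/cpuinfo`, for example `knm_extensions(open("/proc/cpuinfo").read())`. The function takes text rather than a path so it can be tried on any machine with a pasted sample.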

It’s interesting to note that Intel has no plans to integrate the first two extensions into its mainstream processors. Only VPOPCNTDQ will make it into future Ice Lake server parts.
