Cambricon Reaches for the Cloud With a Custom AI Accelerator, Talks 7nm IPs

Earlier this month Cambricon announced its first AI accelerator for the data center, a bid for a share of a booming AI market that is currently dominated by companies such as Nvidia and Intel. The startup has only been around since 2016 but has already shipped its IPs in millions of devices, thanks in part to a large push from the Chinese government. Cambricon’s first IP, the 1A, has been shipping in HiSilicon’s Kirin 970 since late last year.

The new chip is called the MLU100 and is designed for data center workloads. Though we do not have architectural details yet, Cambricon told us that the MLU100 can be thought of as a scaled-up version of its mobile IPs, in both computational power and power consumption. The chip is the result of a multi-year development effort that includes a large set of changes and improvements over the mobile design.

In case you were wondering, MLU stands for Machine Learning Unit. The MLU100 implements Cambricon’s most recent architecture, which is still MLUv01. The chip is fabricated on TSMC’s 16nm process and operates at a base frequency of 1 GHz, which Cambricon refers to as balanced mode. There is also a high-performance mode at 1.3 GHz, although efficiency (performance per watt) is slightly worse in that mode.

In balanced mode, the chip has a theoretical peak performance of 128 trillion fixed-point (8-bit integer) operations per second (128 TOPS), rising to 166.4 TOPS in high-performance mode. For half-precision floating point (16-bit), the chip is capable of 64 TFLOPS at 1 GHz and 83.2 TFLOPS in high-performance mode. This is all done within a power envelope of 80 W (balanced) and 110 W (high-performance). Cambricon says that the chip does well with all popular machine learning algorithms through its NeuWare software platform, which supports the popular development frameworks (TensorFlow, Caffe, MXNet, Android NN, etc.).
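The quoted figures can be cross-checked with a little arithmetic. The following is a minimal sketch that uses only the numbers above; the names `MODES`, `ops_per_cycle`, `tops_per_watt`, and `summarize` are ours for illustration, and the per-cycle figures are simply what the peak numbers imply, not a disclosed architectural detail.

```python
# Peak figures quoted by Cambricon for the MLU100 (from the article).
MODES = {
    "balanced": {"freq_ghz": 1.0, "int8_tops": 128.0, "fp16_tflops": 64.0, "watts": 80.0},
    "high-performance": {"freq_ghz": 1.3, "int8_tops": 166.4, "fp16_tflops": 83.2, "watts": 110.0},
}


def ops_per_cycle(tera_ops: float, freq_ghz: float) -> float:
    """Operations per clock cycle implied by a peak tera-ops figure."""
    return tera_ops * 1e12 / (freq_ghz * 1e9)


def tops_per_watt(tera_ops: float, watts: float) -> float:
    """Peak efficiency in tera-ops per watt."""
    return tera_ops / watts


def summarize() -> dict:
    """Derive per-cycle throughput and efficiency for each operating mode."""
    result = {}
    for name, m in MODES.items():
        result[name] = {
            "int8_ops_per_cycle": ops_per_cycle(m["int8_tops"], m["freq_ghz"]),
            "fp16_ops_per_cycle": ops_per_cycle(m["fp16_tflops"], m["freq_ghz"]),
            "int8_tops_per_watt": tops_per_watt(m["int8_tops"], m["watts"]),
        }
    return result


if __name__ == "__main__":
    for mode, values in summarize().items():
        print(mode, {k: round(v, 3) for k, v in values.items()})
```

Both modes work out to the same number of operations per cycle, so the higher peak comes entirely from the clock increase. Efficiency falls from 1.6 TOPS/W in balanced mode to roughly 1.51 TOPS/W in high-performance mode.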

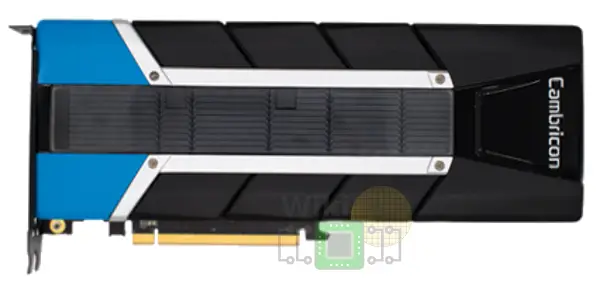

Accelerator Card

An accelerator card based on the MLU100 has also been announced. The x16 PCIe card comes in two variations, with 16 GB or 32 GB of DDR4, and has a memory bandwidth of 102.4 GB/s. Cambricon has already partnered with Lenovo to offer the cards as an optional add-on for its ThinkSystem SR650 servers. The SR650 supports up to two Xeon Scalable CPUs with up to 56 cores and 3 TiB of memory, as well as up to two MLU100 accelerator cards.
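To put the memory bandwidth in context, the peak compute figures can be divided by it to get the number of operations the chip can perform per byte fetched from DDR4. This is a rough sketch under our own assumptions: 1 GB is taken as 10^9 bytes, the 102.4 GB/s figure is assumed to apply in both operating modes, and the helper name `ops_per_byte` is ours. It says nothing about on-chip buffering, which Cambricon has not detailed.

```python
MEMORY_BANDWIDTH_GB_S = 102.4  # card memory bandwidth quoted in the article

# Peak 8-bit integer throughput quoted for the MLU100, in TOPS.
INT8_TOPS = {"balanced": 128.0, "high-performance": 166.4}


def ops_per_byte(tera_ops: float, bandwidth_gb_s: float) -> float:
    """Peak operations available per byte of off-chip memory traffic.

    Assumes 1 GB = 1e9 bytes and that both figures are theoretical peaks.
    """
    return tera_ops * 1e12 / (bandwidth_gb_s * 1e9)


if __name__ == "__main__":
    for mode, tops in INT8_TOPS.items():
        ratio = ops_per_byte(tops, MEMORY_BANDWIDTH_GB_S)
        print(f"{mode}: {ratio:.0f} int8 ops per byte of DDR4 traffic")
```

A ratio in the low thousands suggests that reaching peak throughput would depend on heavy data reuse on the chip rather than streaming operands from DDR4.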

MLU200

In addition to the MLU100, Cambricon plans to introduce a companion chip called the MLU200, which is intended for training in addition to inference. No further information about this chip is available yet, but Cambricon plans to officially unveil the product by the end of this year.

7nm Mobile IP

At the same press conference, Cambricon announced its third-generation IP core, the Cambricon-1M, implemented on TSMC’s 7nm process. The first-generation IP, the Cambricon-1A, which launched in 2016, can be found in chips such as HiSilicon’s Kirin 970. The 1M is expected to debut in the Kirin 980, which is set to launch later this year. As with prior IPs, the Cambricon-1M comes in three flavors: 2 TOPS, 4 TOPS, and 8 TOPS, with a reported efficiency of 5 TOPS/W. Earlier this year ARM announced its own machine learning processor, for which it advertises an efficiency of around 3 TOPS/W in a 7nm implementation. Both numbers are quoted for 8-bit integer operations.
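As a back-of-the-envelope illustration, the quoted efficiencies translate into the following implied power figures. This sketch assumes the 5 TOPS/W figure holds for all three Cambricon-1M configurations, which the announcement does not state, and it evaluates ARM's advertised 3 TOPS/W at the same throughput points purely for comparison; ARM's actual configurations may differ. The function names are ours.

```python
CAMBRICON_1M_TOPS = (2.0, 4.0, 8.0)  # three announced flavors, in TOPS
CAMBRICON_TOPS_PER_WATT = 5.0        # reported efficiency (8-bit integer)
ARM_TOPS_PER_WATT = 3.0              # advertised for ARM's 7nm implementation


def implied_watts(tops: float, tops_per_watt: float) -> float:
    """Power implied by a throughput figure at a given efficiency."""
    return tops / tops_per_watt


def compare() -> list:
    """Return (tops, watts at 5 TOPS/W, watts at 3 TOPS/W) per configuration."""
    return [
        (
            tops,
            implied_watts(tops, CAMBRICON_TOPS_PER_WATT),
            implied_watts(tops, ARM_TOPS_PER_WATT),
        )
        for tops in CAMBRICON_1M_TOPS
    ]


if __name__ == "__main__":
    for tops, w_cambricon, w_arm in compare():
        print(f"{tops:.0f} TOPS: {w_cambricon:.2f} W at 5 TOPS/W, {w_arm:.2f} W at 3 TOPS/W")
```

Under these assumptions, the top 8 TOPS configuration would draw about 1.6 W, compared with roughly 2.7 W for the same throughput at 3 TOPS/W.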


Derived WikiChip articles: MLU100.
