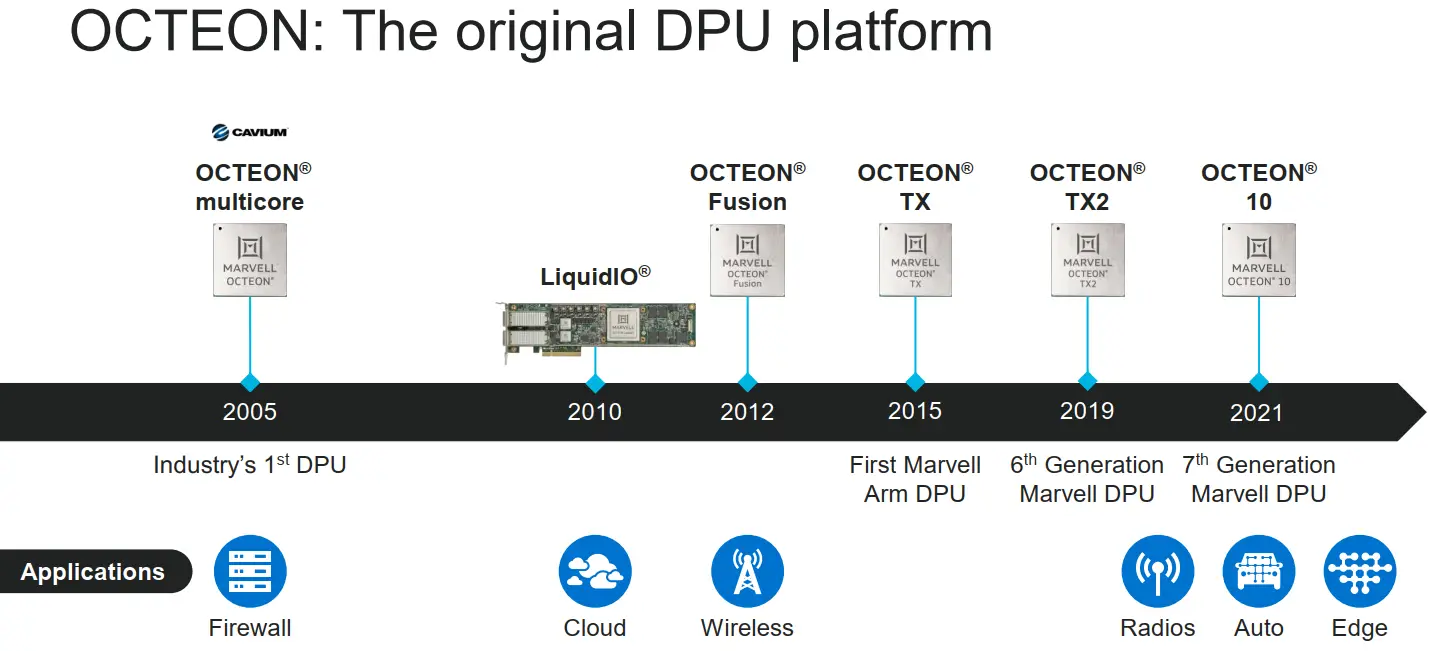

Marvell Launches 5nm Octeon 10 DPUs with Neoverse N2 cores, AI Acceleration

Over the past few months, Marvell has been gradually moving its entire networking portfolio onto TSMC’s latest 5-nanometer process in order to gain an edge in power efficiency. Today, Marvell is extending this to its DPUs with the launch of the new OCTEON 10 DPU series for edge through data-center applications.

Octeon 10

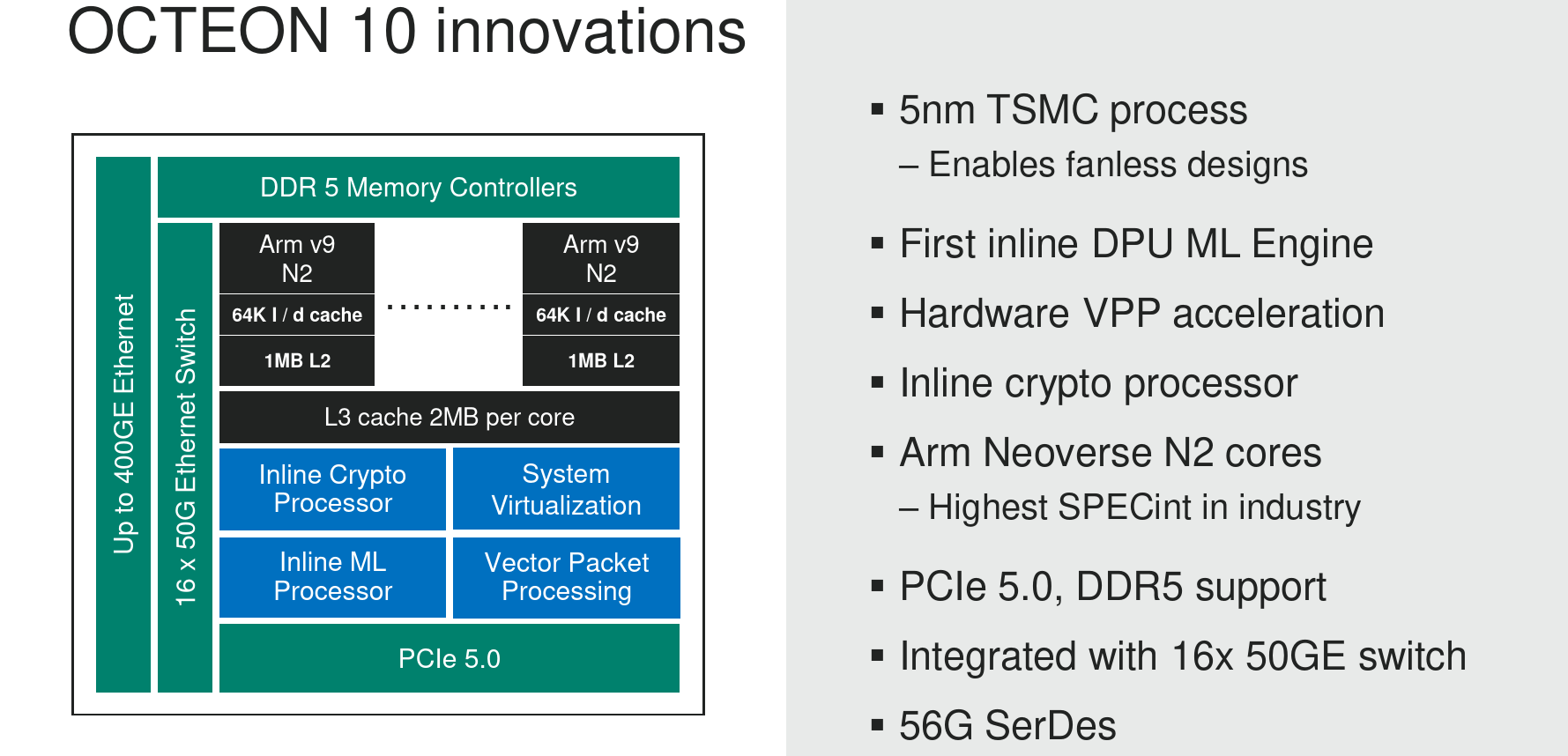

Marvell’s new Octeon 10 platform is designed to scale all the way from fanless edge and 5G (e.g., base station) applications to enterprise and cloud vendors. The new Octeon 10 parts will compete against chips such as Nvidia’s BlueField-2 and future BlueField-3 DPUs. Fabricated on TSMC’s 5 nm process, these chips feature the new Arm Neoverse N2 cores. Marvell previously developed its own CPU cores. With the new Octeon 10, the company has gone back to licensing Arm’s standard infrastructure cores, focusing on custom features rather than putting the bulk of its effort into the CPU. It’s worth pointing out that this also makes Marvell’s new Octeon 10 the first Armv9 server chips on the market, and they come with SVE2 (2x128b vector units). On the Octeon 10, each N2 core features private 64 KiB L1 instruction and data caches along with 1 MiB of private L2 cache and an additional 2 MiB of L3 cache per core.

On the I/O side, the Octeon 10 supports the latest interfaces, including DDR5 memory and PCIe Gen 5. For networking, the chips support anything from 1G all the way up to 400 Gigabit Ethernet. As for hardware accelerators, Marvell integrated a new ML processor as well as a vector packet processor (“VPP”), and also enhanced the crypto processor.

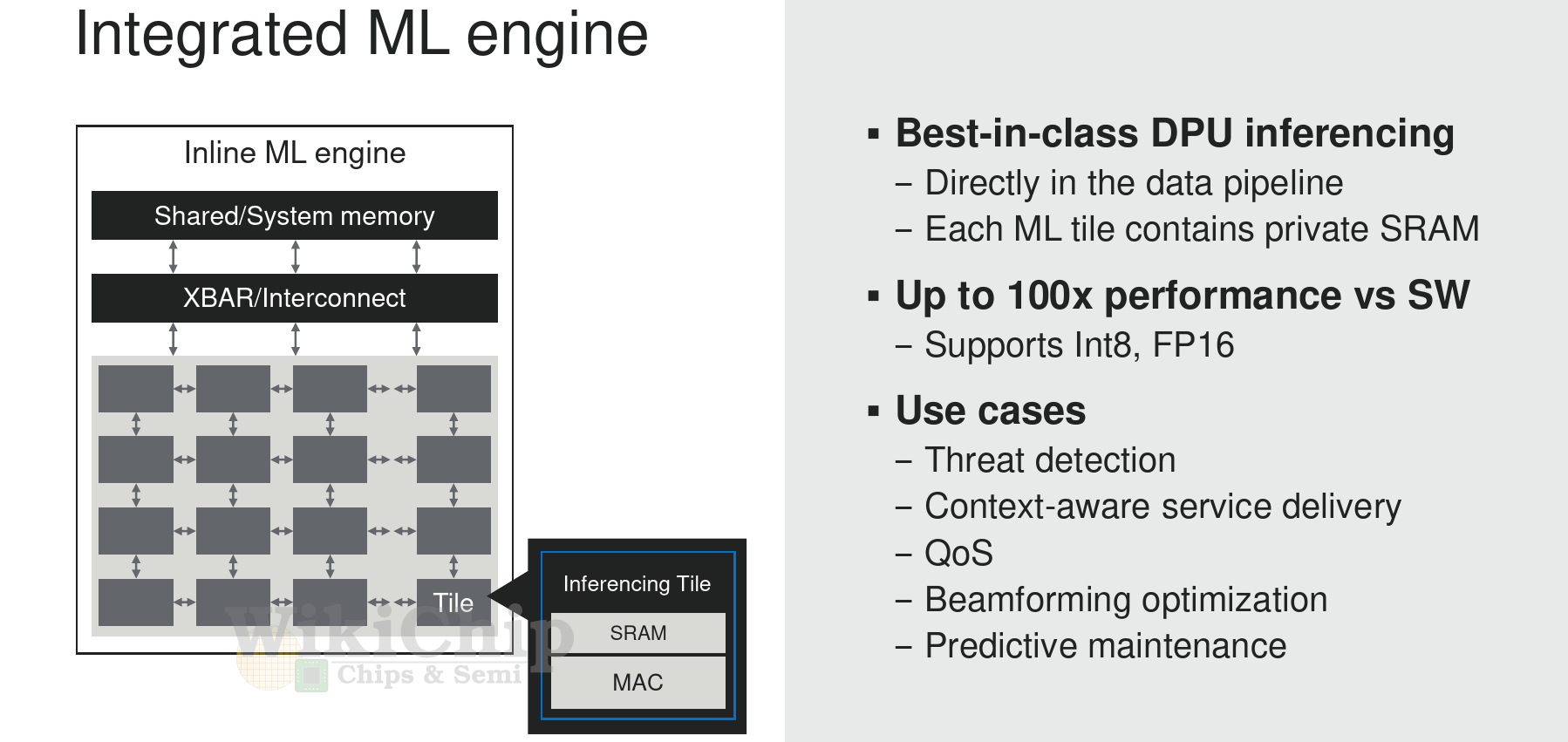

AI Acceleration

One of the new additions to the Octeon 10 is the AI accelerator. A few years ago Marvell designed a standalone AI inference accelerator for the data center that it called the M1K. Although it never made it to production, this accelerator is now getting a new life on the Octeon 10. Integrating it on the DPU allows applications to perform inference at very low latencies as they process the data. The ML engine is a tiled architecture integrating a varying number of Inferencing Tiles interconnected using a crossbar. Each tile incorporates a private SRAM block along with a dedicated multiply-accumulate block. Eight- and sixteen-bit operations are supported. The total compute power of the ML engine is somewhere around 20 TOPS for the smaller devices and can scale all the way to hundreds of TOPS on the large 400G devices. Marvell says that the performance the ML engine offers should be more than sufficient for the kind of use cases it sees its customers using it for (e.g., malware detection).
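To make the tiled multiply-accumulate idea more concrete, here is a minimal Python sketch of an 8-bit dot product split across several tiles. It is only an illustration under stated assumptions: Marvell has not disclosed the tile microarchitecture, and the names `tile_mac` and `tiled_dot`, the way work is divided, and the wide accumulator are all hypothetical.

```python
def tile_mac(weights, activations):
    """One hypothetical tile: multiply-accumulate over signed 8-bit operands.

    The weight slice stands in for data held in the tile's private SRAM.
    Products are summed into a Python int, which plays the role of a
    wide accumulator so the 8-bit products cannot overflow.
    """
    acc = 0
    for w, a in zip(weights, activations):
        if not (-128 <= w <= 127 and -128 <= a <= 127):
            raise ValueError("operands must fit in signed 8 bits")
        acc += w * a
    return acc


def tiled_dot(weights, activations, num_tiles):
    """Split a dot product across num_tiles tiles and combine the partial sums.

    The final sum stands in for results being gathered over the crossbar.
    """
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    # Ceiling division so every element is assigned to some tile.
    chunk = max(1, -(-len(weights) // num_tiles))
    partials = [
        tile_mac(weights[i:i + chunk], activations[i:i + chunk])
        for i in range(0, len(weights), chunk)
    ]
    return sum(partials)
```

In this sketch, adding tiles only changes how the work is partitioned, not the result, which is the property that lets a tiled design scale from the smaller devices to the larger ones.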

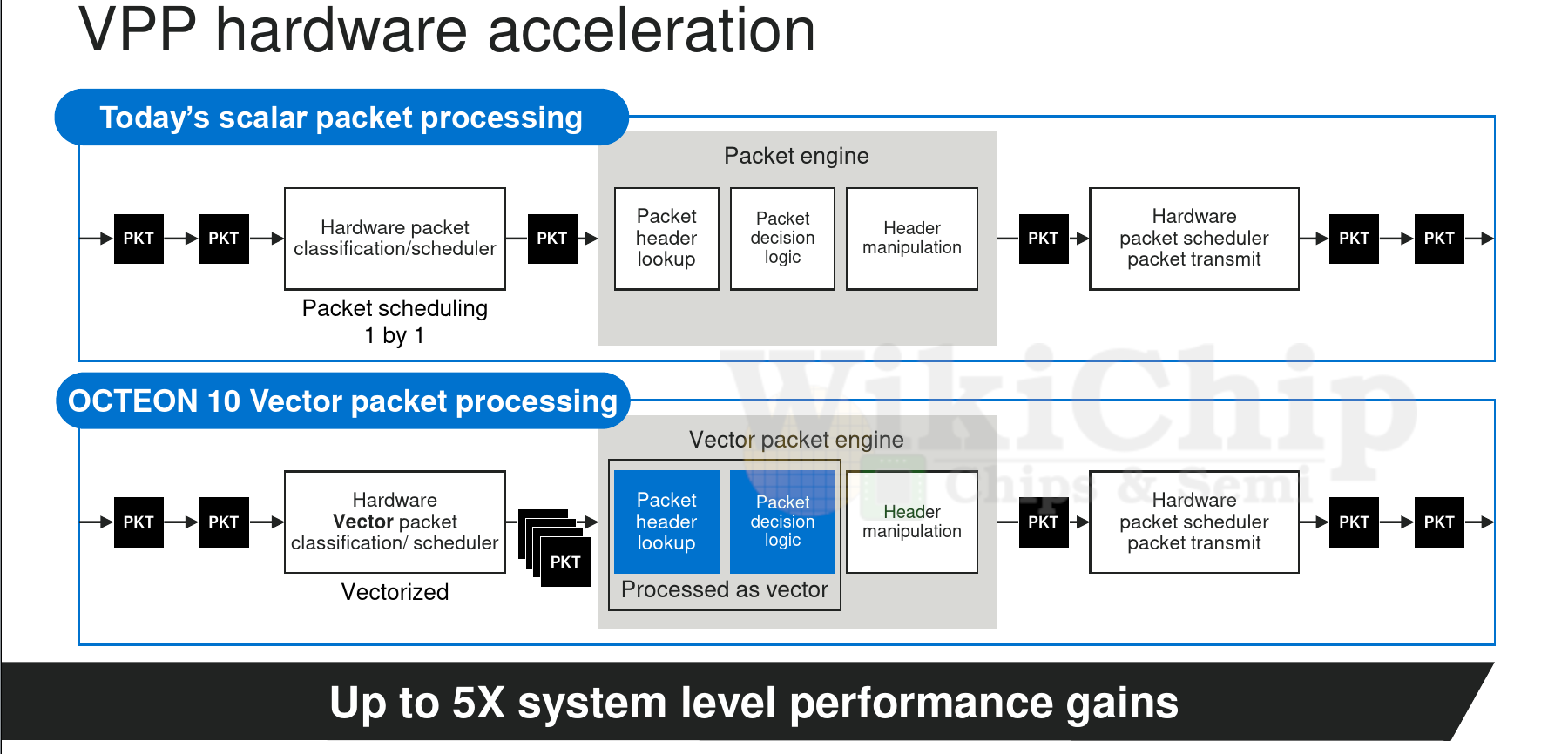

VPP

The vector packet processor (VPP) is another new feature that Marvell added to the Octeon 10. Here the company took its standard packet processing pipeline and modified it to support vectorized packets, and this is exactly what it sounds like. The front end of the pipeline was modified so that it can group packets together into a vector. It’s now possible to group a set of packets based on some classification (e.g., a certain header). This allows a single operation (e.g., a packet header lookup) to be performed on all the packets at once, followed by a single decision applied to every packet in the vector, instead of doing this individually for each packet.
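The grouping idea can be sketched in software. The following Python example is a rough illustration, not Marvell's hardware design: the `Packet` type, the `FORWARDING_TABLE` contents, and the choice of destination address as the classification key are all assumptions made for the example.

```python
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    dst_ip: str      # header field used as the classification key (assumed)
    payload: bytes


# Hypothetical forwarding table: destination address -> output port.
FORWARDING_TABLE = {"10.0.0.1": "port0", "10.0.0.2": "port1"}


def group_into_vectors(packets):
    """Front end: group packets that share the same classification key."""
    vectors = defaultdict(list)
    for pkt in packets:
        vectors[pkt.dst_ip].append(pkt)
    return dict(vectors)


def process_vectors(vectors):
    """Do one table lookup per vector and apply the result to every packet in it.

    Returns the (packet, output port) decisions and the number of lookups made.
    """
    decisions = []
    lookups = 0
    for key, vector in vectors.items():
        out_port = FORWARDING_TABLE.get(key, "drop")  # single lookup per vector
        lookups += 1
        decisions.extend((pkt, out_port) for pkt in vector)
    return decisions, lookups
```

With five packets that share three distinct destination addresses, this sketch performs three lookups instead of five; the saving grows with the number of packets per vector.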

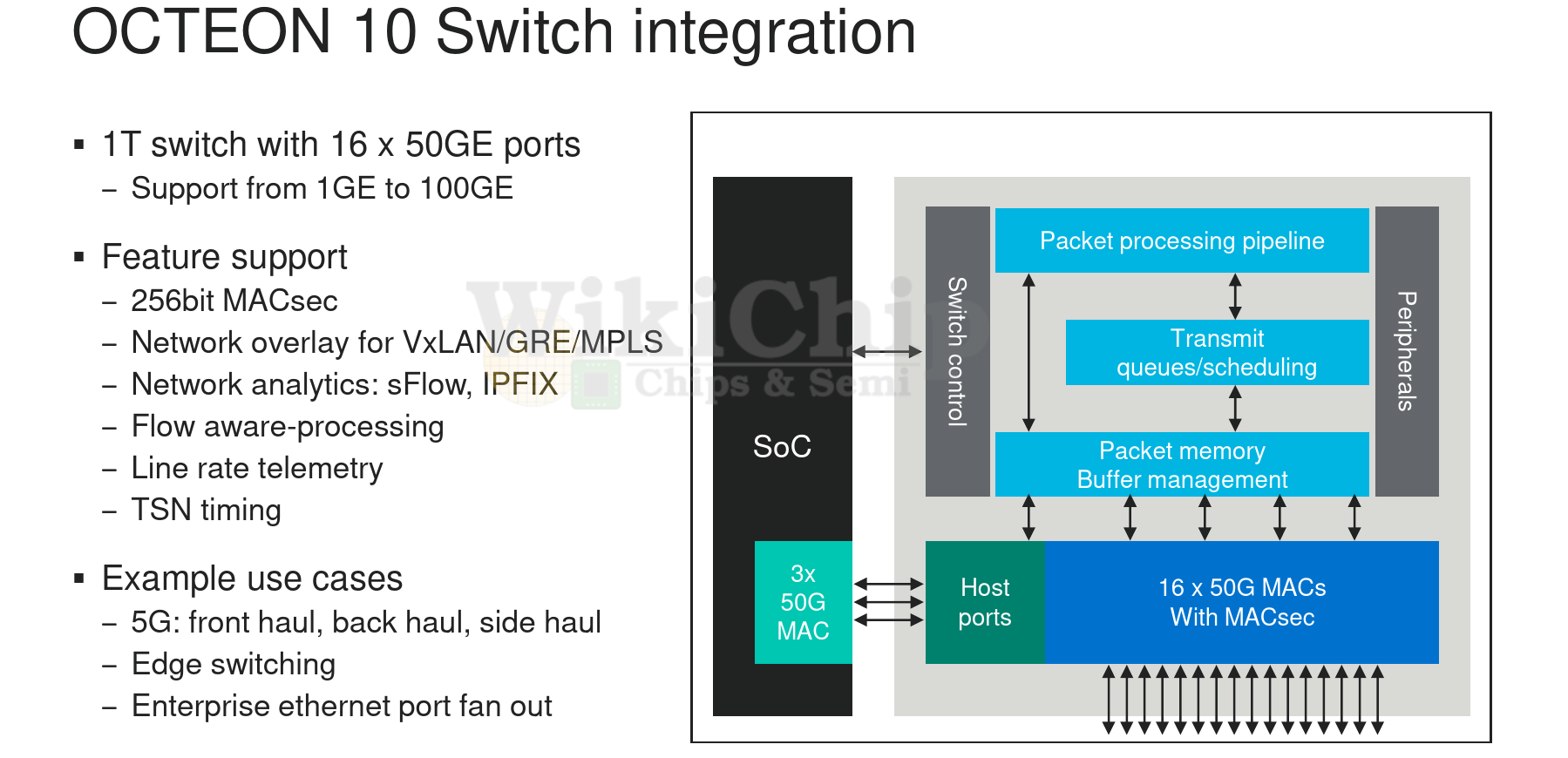

Switch Integration

On the Octeon 10, Marvell also integrated a 1 Terabit switch. It is implemented as 16 ports of 50 Gigabit Ethernet, and these are configurable (e.g., anywhere from 1G all the way to 100GE by combining multiple SerDes). In addition to the usual features such as network overlay and analytics, Marvell added 256-bit MACsec to the switch as well as time-sensitive networking support.

SKUs

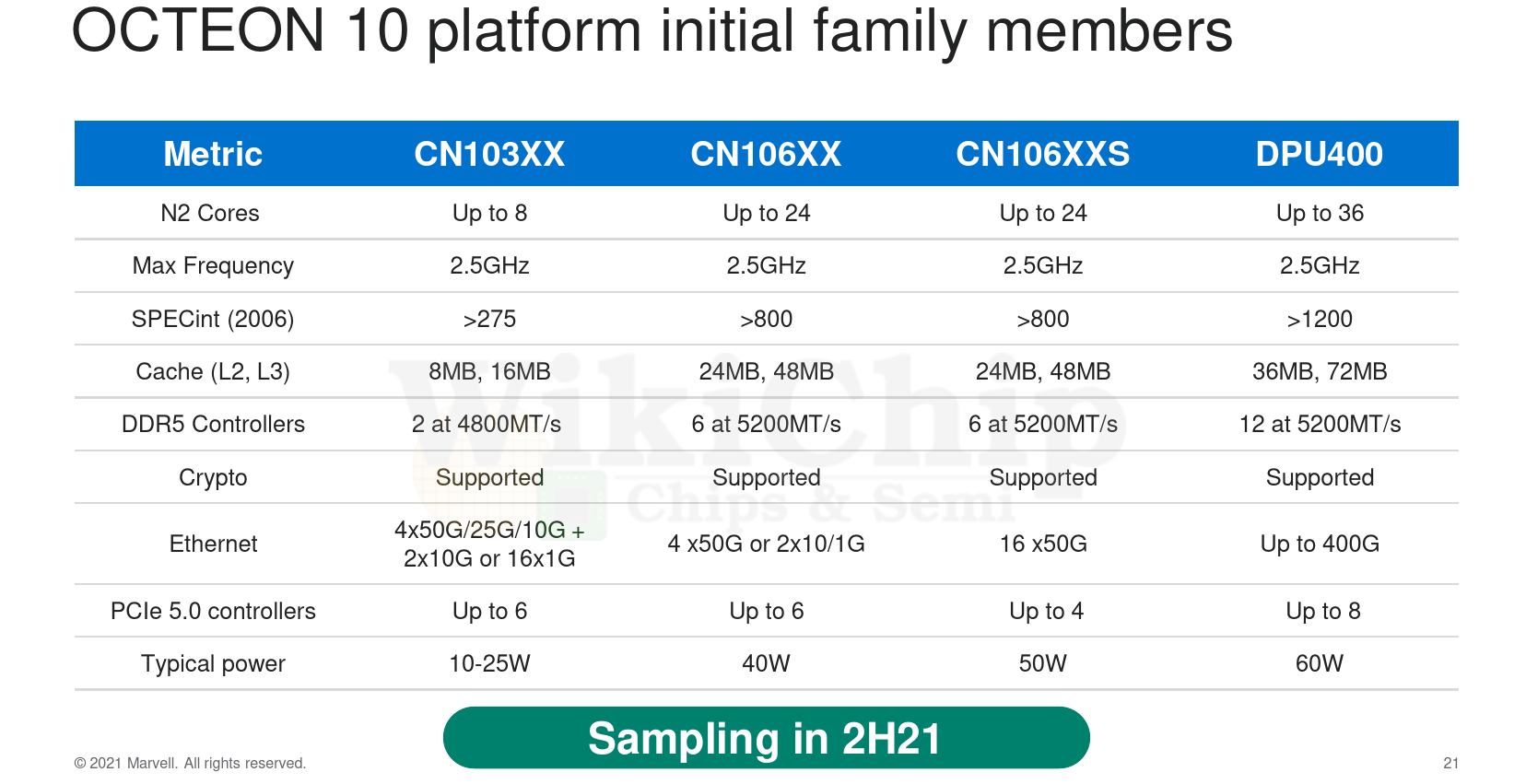

The initial members of the Octeon 10 family are shown below. They range from 8 to 36 cores. The 103 is geared towards sub-25W edge devices and fanless applications. The 106 is the mainstream SKU with 24 Neoverse N2 cores and 6 DDR5 controllers. Marvell says that the 106 and 106S (S for switch) are already at TSMC with silicon coming back this quarter. The company expects to start sampling by the end of the third quarter or early in the fourth quarter. The highest-performance part will have 36 cores along with 12 DDR5 controllers and 8 PCIe Gen 5 controllers. The DPU400 offers up to the full 400 Gigabit Ethernet connectivity for data center applications. Despite all that integration, these chips still have very attractive power ratings of around 60 W.

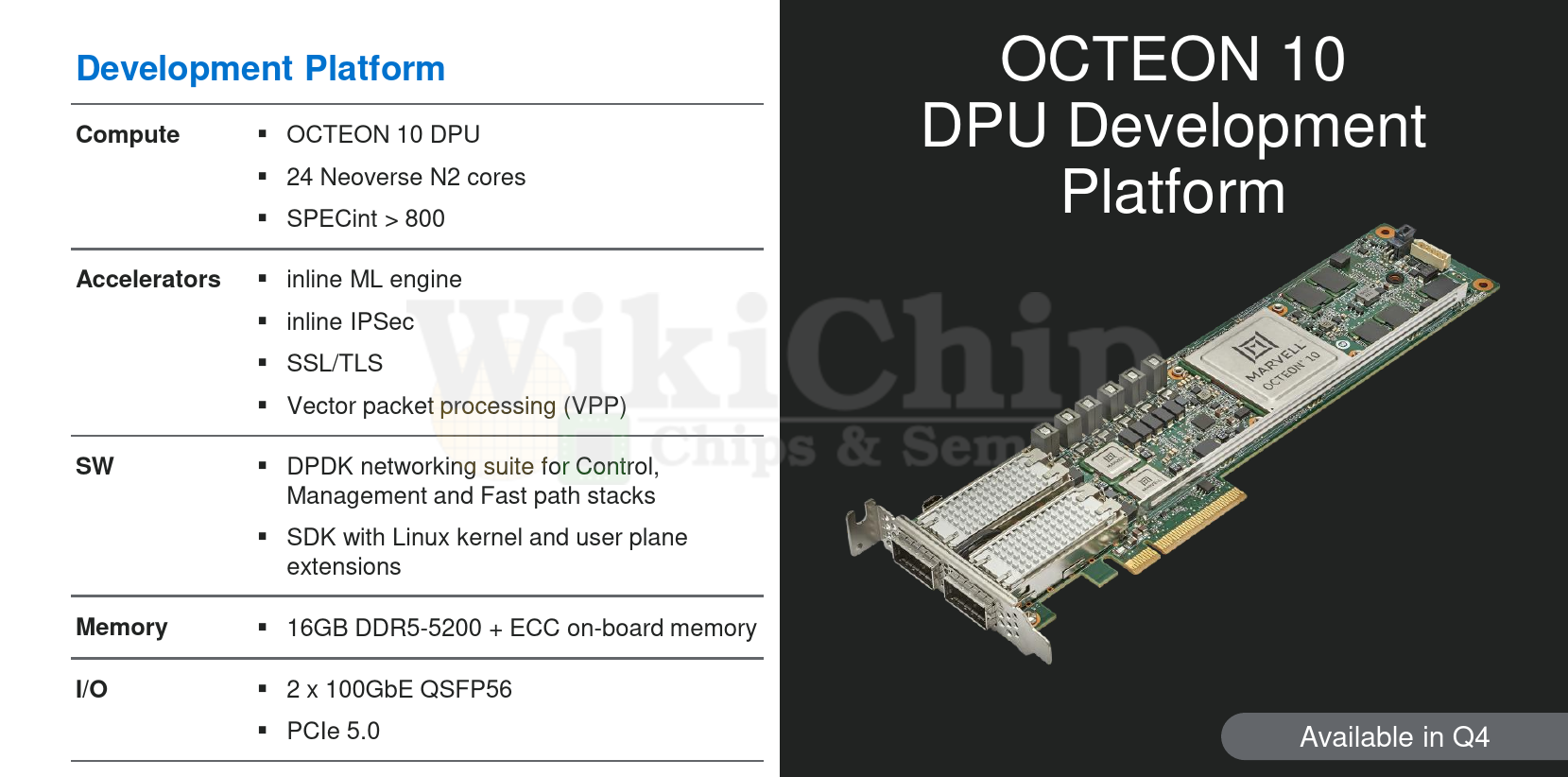

Development Platform

Marvell is currently working on an application development platform for the Octeon 10. It is offered in a PCIe card form factor, which would be suitable for things such as enterprise and cloud applications. The first version of this card will have 24 Neoverse N2 cores and will be configured as 2×100 GbE. The development platform will be available to customers by the end of the year.
