Analog AI Startup Mythic To Compute And Scale In Flash

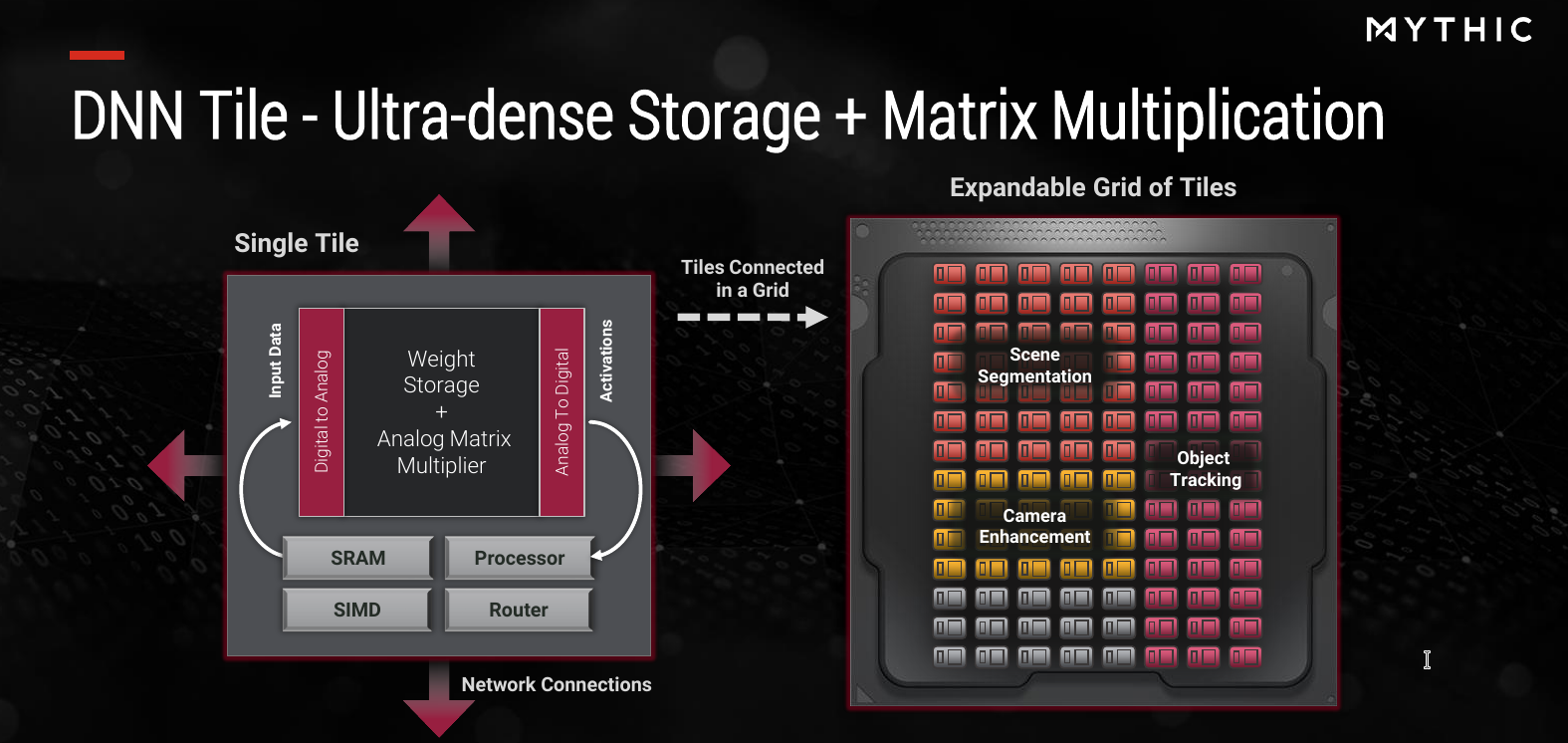

DNN Tiles

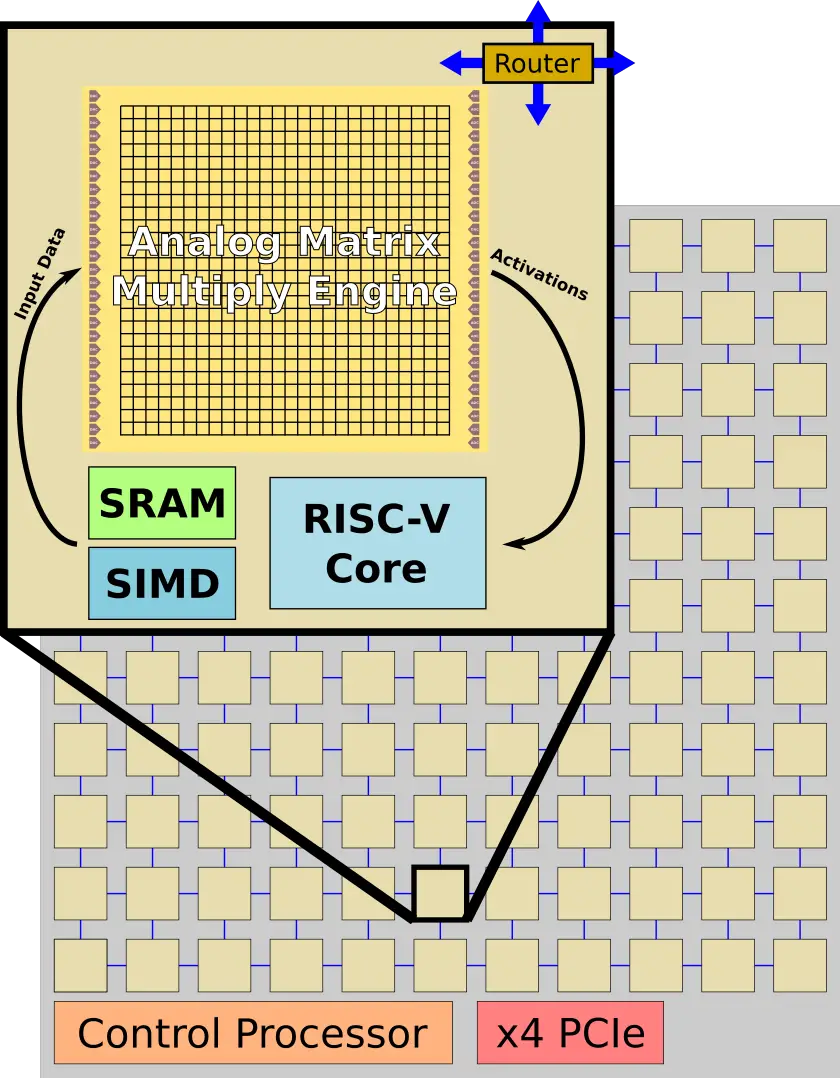

Each tile can perform a 1000-by-1000 matrix product within a few microseconds. Along with the analog matrix engine, Mythic equipped each tile with conventional SRAM for temporary data storage, a RISC-V processor, and a fast vector DSP for non-MAC operations such as AveragePool and MaxPool. These additions are meant to support pooling, complex activation functions, tensor manipulation, and higher-precision convolutions if needed.

Mythic chose RISC-V cores over licensing MIPS or Arm cores because of licensing costs and the freedom to customize them.

With each tile holding a 1000-by-1000 matrix (about one million weights), supporting up to 120 million parameters per chip implies around 120 tiles in total, or a grid of about 11 by 10 (the exact layout was not disclosed). Each tile incorporates a router that links it to its neighboring tiles over a network-on-chip. In the future, we can probably expect Mythic to offer chips with varying grid sizes.
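The tile estimate above is simple arithmetic, and it can be sketched in a few lines of Python. The function names are our own, and the layer-mapping helper assumes a weight matrix is simply cut into 1000-by-1000 blocks, one block per tile. Mythic has not disclosed how its compiler actually maps layers onto tiles, so treat this as a back-of-the-envelope illustration only.

```python
import math

# Figures taken from the article.
WEIGHTS_PER_TILE = 1000 * 1000   # one 1000-by-1000 analog matrix per tile
CHIP_PARAMETERS = 120_000_000    # advertised per-chip capacity


def tiles_for_capacity(parameters, weights_per_tile=WEIGHTS_PER_TILE):
    """Number of tiles needed to hold `parameters` weights."""
    return math.ceil(parameters / weights_per_tile)


def tiles_for_layer(rows, cols, tile_dim=1000):
    """Hypothetical mapping: cut a rows-by-cols weight matrix into
    tile_dim-by-tile_dim blocks, one block per tile (an assumption,
    not Mythic's disclosed scheme)."""
    return math.ceil(rows / tile_dim) * math.ceil(cols / tile_dim)


tiles = tiles_for_capacity(CHIP_PARAMETERS)
print(tiles)                          # total tiles implied by 120M parameters
print(round(math.sqrt(tiles), 2))     # side length of an equivalent square grid
print(tiles_for_layer(4096, 4096))    # tiles for one 4096-by-4096 layer
```

The square root of the tile count comes out just under 11, which is where the "about 11 by 10" guess comes from. Under the naive block mapping, a single 4096-by-4096 fully connected layer would already occupy 25 tiles.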

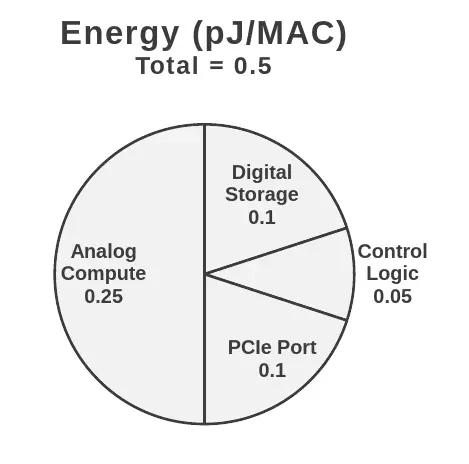

Power

The use of analog compute along with embedded flash is intended to substantially reduce power consumption. Mythic claims that its IPUs operate at about 0.5 pJ per MAC operation. The figure is given for a typical application such as ResNet-50 running at a batch size of 1, and the company says it is fairly application-agnostic. The advertised value includes everything: the analog compute and storage, the PCIe port and I/O, and the rest of the digital logic.

0.5 pJ/MAC works out to roughly 500 mW per TMAC/s of sustained throughput. In total, each chip can deliver peak compute performance on the order of a few tens of TMAC/s at around 5 watts of power.
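The conversion between energy per operation and power is worth spelling out. The sketch below takes the quoted 0.5 pJ/MAC at face value and assumes it holds at any throughput; the function names are ours, and the numbers are not measurements.

```python
PICOJOULE = 1e-12   # joules
TERA = 1e12         # operations


def watts_at(throughput_tmacs, energy_pj_per_mac=0.5):
    """Power in watts when sustaining `throughput_tmacs` TMAC/s."""
    joules_per_mac = energy_pj_per_mac * PICOJOULE
    macs_per_second = throughput_tmacs * TERA
    return joules_per_mac * macs_per_second


def throughput_at(watts, energy_pj_per_mac=0.5):
    """Throughput in TMAC/s that a power budget of `watts` can sustain."""
    joules_per_mac = energy_pj_per_mac * PICOJOULE
    return watts / joules_per_mac / TERA


print(watts_at(1.0))        # power at 1 TMAC/s
print(throughput_at(5.0))   # throughput within a 5 W budget
```

At 0.5 pJ/MAC, 1 TMAC/s costs about half a watt, and a 5 W budget corresponds to roughly 10 TMAC/s.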

The numbers are impressive considering they also include the ADC/DAC. A number of key industry figures, including Bill Dally from Nvidia, have been fairly vocal about analog compute. The biggest criticism is that the conversion to and from analog will eat up much of the power-efficiency gain. We got to ask about it at the AI Hardware Summit. “I would say the answer [to if the ADC/DAC dominate the power consumption] is yes – if you use conventional DAC/ADC designs. One of the biggest secret sauce at Mythic is the very unique architectures we designed for those two components that let us pack 10s of 1000s of them on-chip and have a whole chip run at just a couple Watts of power,” Henry said.

Initial Products

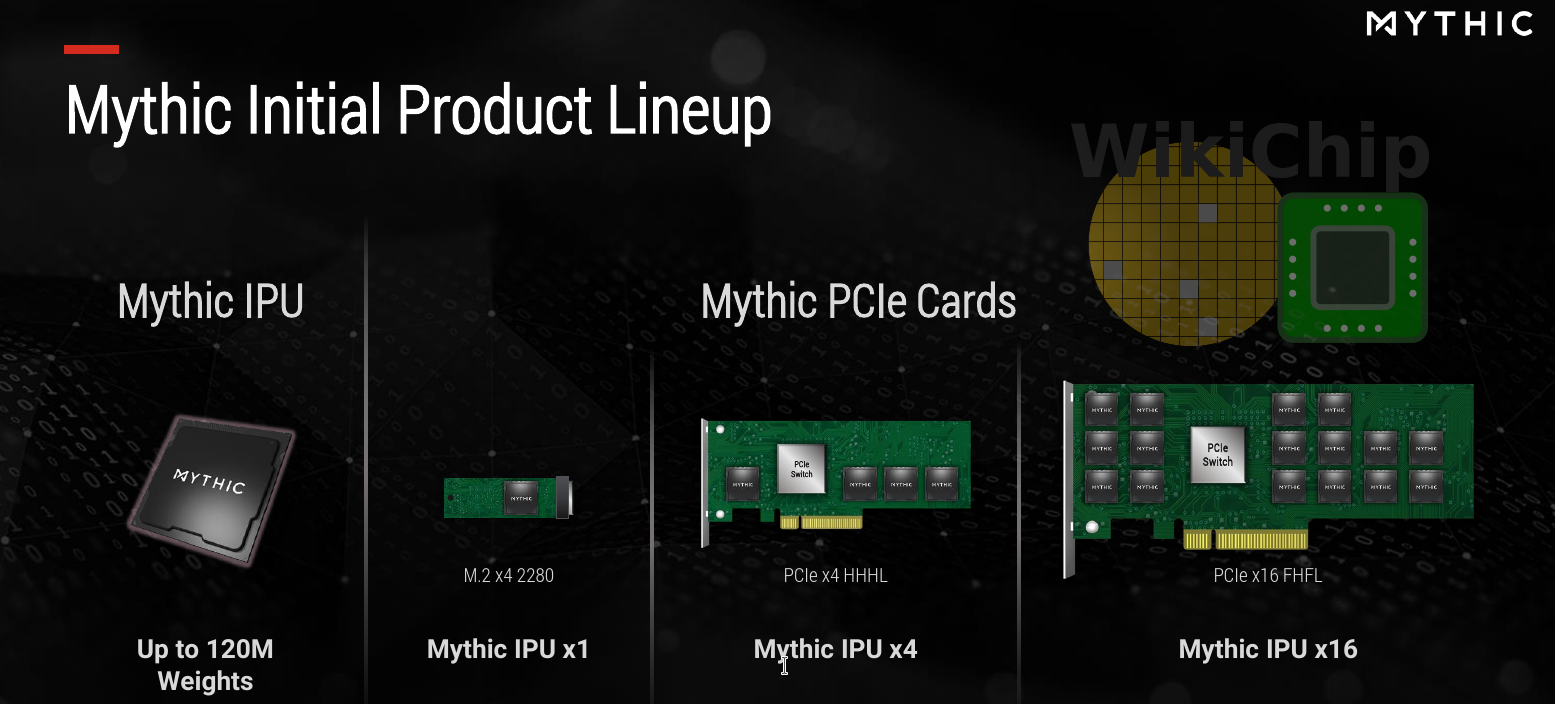

Mythic is planning a wide range of products. But keeping the entire model on-chip comes with limitations not found in classical architectures, such as an upper bound on the model size. With a more traditional approach that uses a large pool of system memory, there is no real limit on the model size, though the bigger the model, the more data movement is required and, ultimately, the lower the performance. To address this, Mythic IPUs can be stitched together to support massive models.
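As a rough feel for what stitching chips together means in practice, the sketch below estimates how many IPUs a model would need based purely on the 120-million-parameter capacity quoted earlier. It is a naive ceiling division under our own assumptions: it ignores any overhead from partitioning a model across chips, and `ipus_needed` is a hypothetical helper, not part of any Mythic tool.

```python
import math

PARAMS_PER_IPU = 120_000_000  # per-chip capacity quoted in the article


def ipus_needed(model_parameters, params_per_ipu=PARAMS_PER_IPU):
    """Lower bound on the number of IPUs needed to hold a model entirely
    on-chip, ignoring partitioning overhead (an assumption)."""
    return max(1, math.ceil(model_parameters / params_per_ipu))


# ResNet-50 has roughly 25 million parameters, so it fits on one chip.
print(ipus_needed(25_000_000))
# A hypothetical 500-million-parameter model would span several chips.
print(ipus_needed(500_000_000))
```

A model that fits within a single chip's capacity needs one IPU, while the hypothetical 500-million-parameter model would need at least five.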

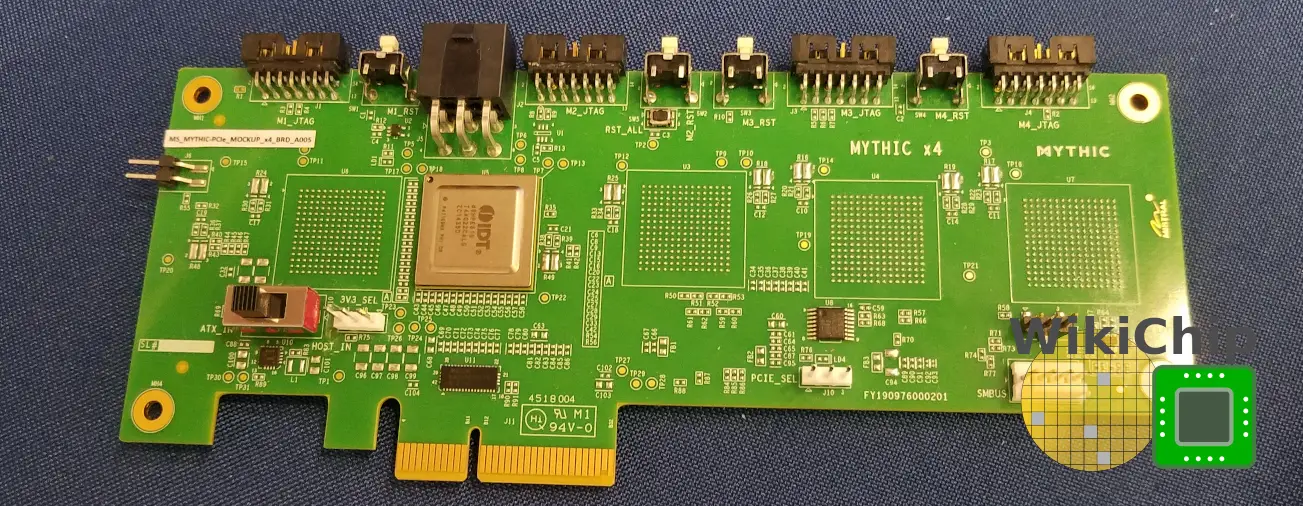

The simplest product is the Mythic IPU x1, a single-IPU M.2 PCIe x4 inference card very similar to Intel’s NNP I-1000. We were able to check out some of the early reference boards awaiting the IPUs.

Mythic also plans a number of larger boards. The Mythic IPU x4 will offer four IPUs on an HHHL (half-height, half-length) card.

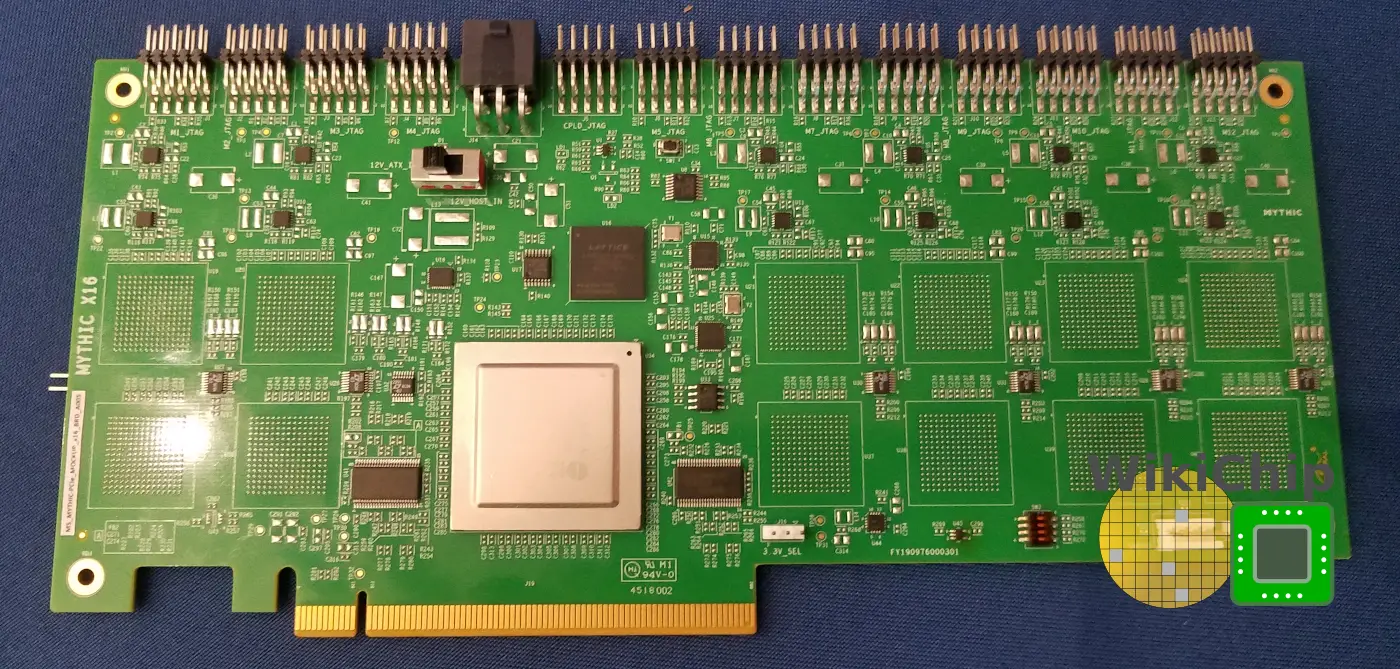

Finally, there is the Mythic IPU x16, an FHFL (full-height, full-length) card with 16 IPUs. This one should offer capacity for models of up to around 1.5 billion parameters or, alternatively, a number of smaller but still very large models. The large capacity is important because the chip cannot be reprogrammed quickly, so the set of applications it runs should remain fixed.

“I am happy to say that we have passed the R&D phase and we are actually in the process of bringing up engineering samples,” Henry said. Since the company intends to start shipping by the end of the year, we expect more news in the coming months.
