Groq Tensor Streaming Processor Delivers 1 PetaOPS of Compute

High-profile AI startup Groq is exiting stealth mode and has started talking – and the numbers being disclosed are fascinating. Groq is an AI hardware startup with roots in the engineering team behind Google’s original TPU. Founded in 2016, the company raised over $62 million.

Tensor Streaming Architecture: A Deterministic Approach

WikiChip got to sit down and talk with Groq about its architecture. Groq’s new architecture is the Tensor Streaming Architecture (TSA); a very different architecture from most other startups and existing processors. It’s designed to be a powerful single-threaded streaming processor equipped with an instruction set designed specifically to take advantage of tensor manipulation and tensor movements that allow machine learning models to be executed more efficiently. The unique aspect of the architecture is the interactions between the execution units, memory, and other execution units.

Groq’s magic is not only in the hardware but also in the software. In fact, it was the compiler that came first, not the prototype hardware architecture. Software-defined hardware plays a big role here. Groq’s software compiles the tensor flow models or other deep learning models into independent instruction streams that get highly coordinated and orchestrated ahead of time. The orchestration comes from the compiler. It determines and plans the entire execution ahead of time, enabling very deterministic compute. “That determinism comes from the fact that our compiler statically schedules all the instruction units. This allows us to eliminate the need for any aggressive speculation to expose instruction-level parallelism. There are no branch target buffers or caching agents on the chip,” explained Dennis Abts, Groq’s Chief Architect. Instead of making a smaller chip, Groq is going after maximizing the performance, using all the freed-up silicon to add more memory and execution blocks.

A big benefit of its deterministic architecture is the elimination of tail latency which is a result of synchronization. In a more traditional multi-core design the limiter of performance and scaling are those very few last responses that stall the synchronization event. In other words, you are bottlenecked by the very last thing that is participating in that synchronization event. For Groq’s TSP, it’s a zero-overhead synchronization. Deterministic behavior has other benefits. In safety-critical applications, predictable performance from consecutive inferences is crucial. Likewise, in the data center, Groq argues that managing the power and runtime power interactions that come with modern chipsets such as dynamic frequency scaling and other aggressive power management techniques that introduce complexity is complicated. With its TSP, the execution and power behavior is always the same and is pre-determined at compile-time. “One of the things that the deterministic execution buys us is that we are able to, at compile-time, know exactly the performance of that model – down to the clock cycle. That performance is very predictable and very repeatable. So we avoid the complex hardware speculation and aggressive speculation techniques to be able to expose more ILP. It’s a very structured and much simpler design,” said Abts.

There are some interesting challenges with the TSP. Edge inference often involves a set of tasks – not something that can be done simultaneously on the TSP. Determinism comes from its single-thread streaming approach. Depending on the active input, they simply swap the new data in and out as needed. It’s possible that the deterministic nature of the chip largely compensates for this shortcoming.

Though the architecture can do both, its current chip is designed for inference intended for mass deployment in everything from the edge to the data center. WikiChip was shown a die shot of the chip, and though we can’t publish it just yet, a simplified floorplan is shown below. The chip itself has a lot of arithmetic units. There’s also an abundance of on-die memory enabling very high bandwidth (tens of TBs per second) designed to keep the arithmetic units fed and the data paths busy. The on-chip memory is viewed as a large globally-shared scratchpad memory that everyone has access to. This is different from the more traditional manycore approach which partitioned the memory into smaller individual private memory slices. All current work is kept resident in the on-chip memory. To that end, the current chip is capable of 1 petaOPS of compute. Note that the arithmetic units incorporate both integer units and floating-point. This is a rather important design choice by Groq – instead of going with just integer or floating-point like most of the other neural processors out there, Groq includes both. It claims this allows customers to more easily build their models and plan their AI roadmaps around. Thanks to its floating-point units, it’s also capable of up to 250 trillion floating-point operations per second (250 teraFLOPS).

Currently, Groq is only talking about a single chip configuration. The chip is equipped with a high-bandwidths inter-chip interface designed to support scaling-out to bigger models and being able to take advantage of model-level parallelism across multiple chips.

The company’s first chip is working as desired and they are going to production with an A0 silicon. “We had power-on the first day, programs were running on the first week, and we were sampling across partners within six weeks, ” said Michelle Tomasko, VP of Engineering at Groq. “This is something we are particularly proud of. It’s a validation of the execution of our team,” Tomasko added. Tomasko explained that part of their smooth development is also due to their deterministic architecture which allows them to reduce validation time quite a bit. A test case will always run the same way each time it’s executed without strange edge cases, long tail, or various complex race conditions or combinations of events affecting the behavior of the machine at runtime.

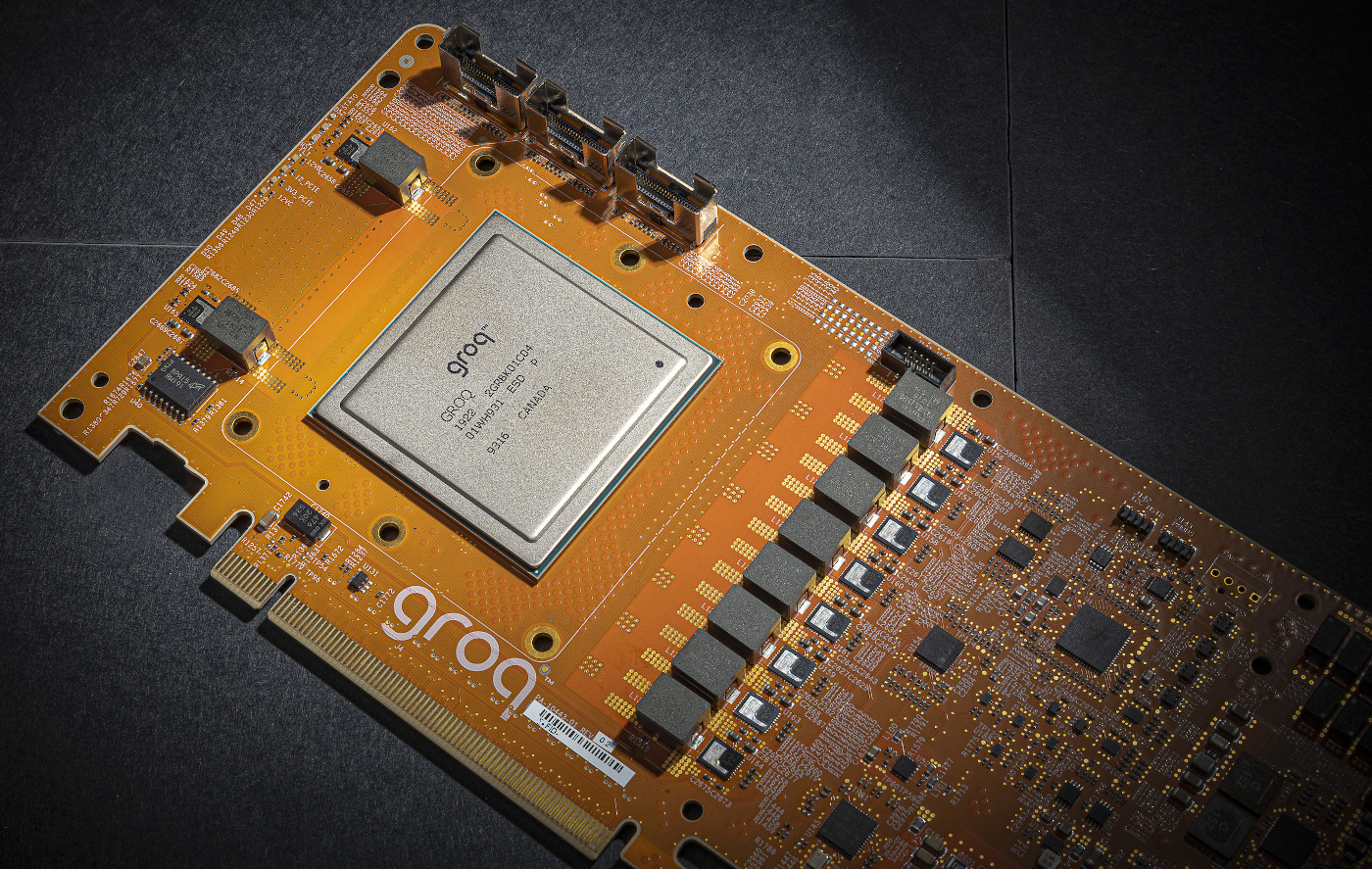

For the moment, Groq is sampling with customers using a PCIe accelerator card. In the future, though, it could expand into other types of platforms. Groq is expected to make further public disclosures in the coming months.

Related Stories

–

Spotted an error? Help us fix it! Simply select the problematic text and press Ctrl+Enter to notify us.

–