Mythic Rolls Out M1000-Series Analog AI Accelerators; Raises $70M Along The Way

As our readers would know, we’ve always been big fans of novel architectures, so it should come as no surprise that we had to revisit Mythic as their new M1000-series true analog accelerators go into production.

We first wrote about their analog compute-in-flash-memory architecture in 2019. A lot has happened since. Mythic has grown to over 110 employees, with offices in Austin, TX and Redwood City, CA. Late last year, they unveiled their first series of analog AI accelerators, the M1000-series, along with their flagship chip. More recently, they expanded that series with a second, more versatile chip. In May, Mythic also announced that it had raised $70 million in Series C funding led by BlackRock and HPE, bringing the total raised to date to $165.2 million. Mythic says the new funding is intended to accelerate plans for mass production, expand customer support, build out its software portfolio, and help develop its next-generation hardware platform.

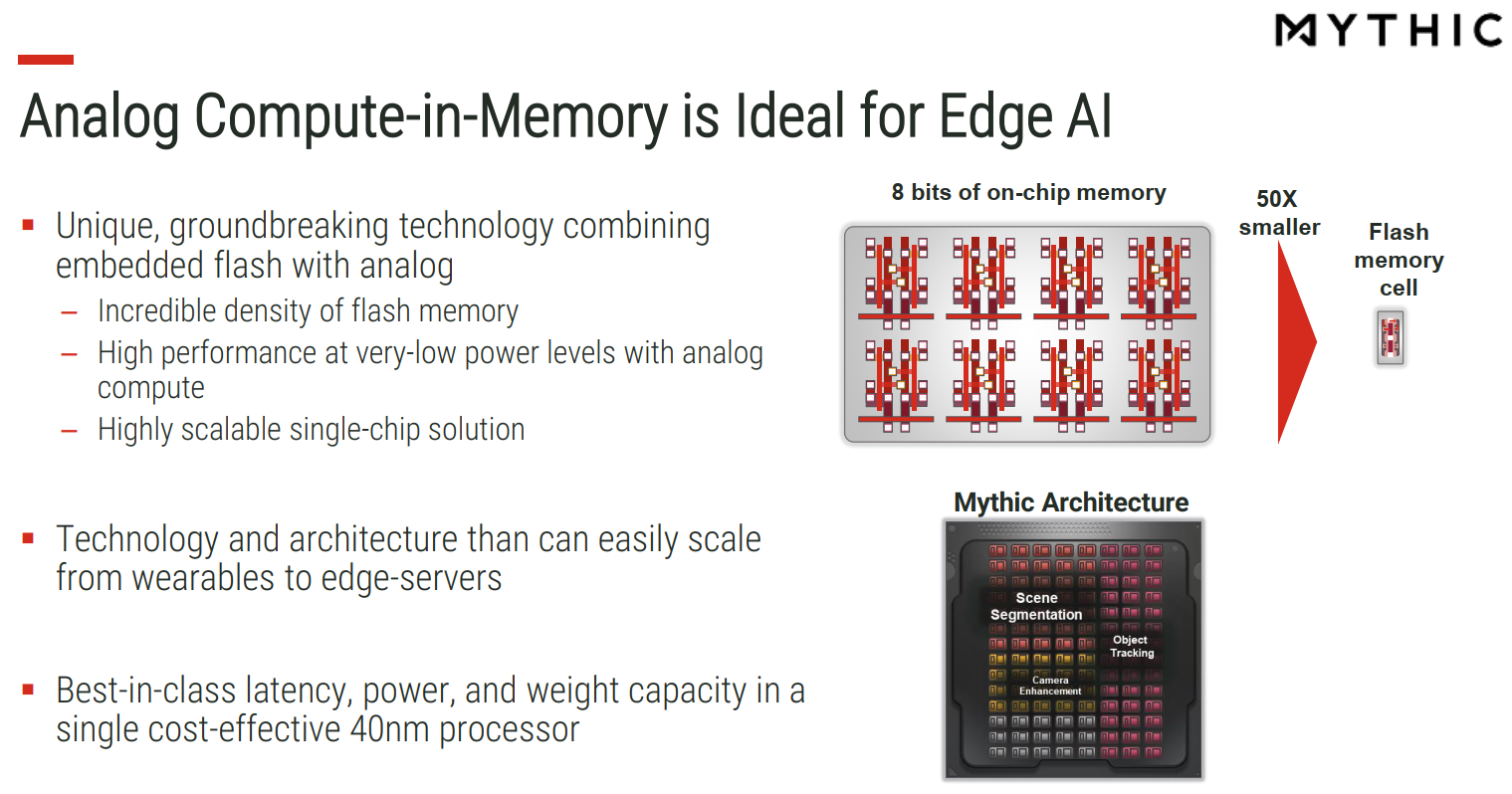

Analog Compute In-Memory

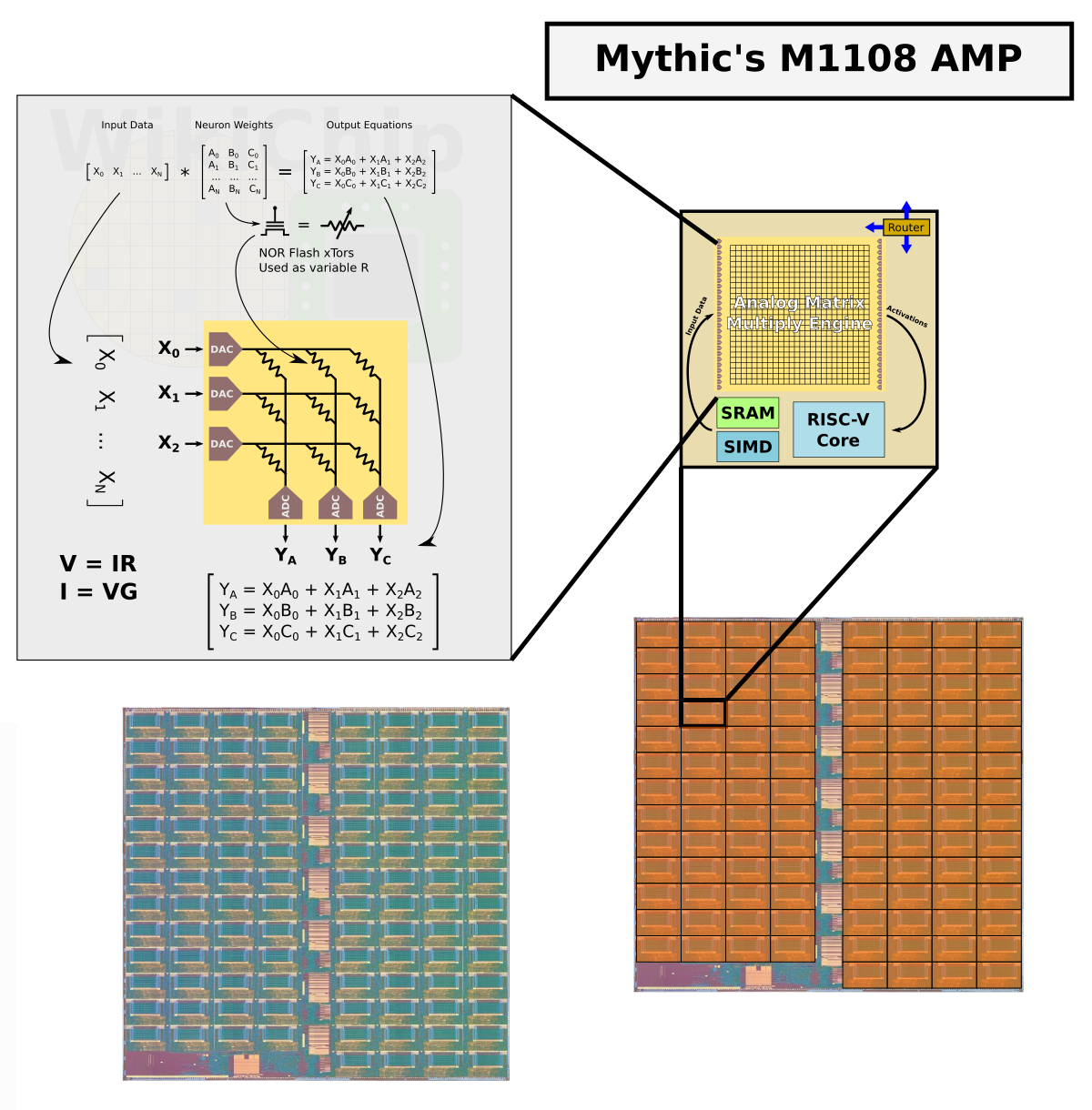

Mythic took a very different approach to accelerating AI workloads. We will only briefly cover their architecture here, as we’ve already covered it more comprehensively before. As you know, the bulk of the computation in neural network workloads takes place in repeated matrix multiply operations. Those operations also happen to be fairly memory-intensive, shifting large amounts of data in and out. To address this, most accelerators rely on a very large amount of on-die SRAM. Alternatively, some rely on external memory backed by high memory bandwidth, achieved either through a large number of memory channels of conventional DDR4/LPDDR4x or through a wider memory bus such as HBM2e.

Mythic, on the other hand, went with flash memory. A single flash cell can store multiple bits and is therefore considerably denser than the equivalent 8-bit SRAM storage. But the real advantage in their case comes from how they use that flash memory. By storing stationary weights in flash and using each flash cell directly as a variable resistor, they can apply a set of input voltages across the array and let the circuit itself perform the matrix multiplication: by Ohm’s law, each cell passes a current equal to its input voltage times its conductance, and the currents sharing an output line add up (Kirchhoff’s current law), producing the multiply-accumulate results. This allows them not only to store the weights in dense memory but also to operate on those weights without actually “reading” them. The combination of flash memory and analog matrix multiplication promises a step-function improvement in energy efficiency and performance-per-cost.
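
To make the principle concrete, here is a minimal Python sketch of an idealized crossbar, assuming each weight is stored as a non-negative conductance and each input is applied as a row voltage. It is not Mythic’s implementation; the helper name `crossbar_currents` is hypothetical, and a real analog design also has to handle signed weights, device noise, and conversion between the digital and analog domains, none of which is modeled here.

```python
from typing import List


def crossbar_currents(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Idealized analog crossbar (hypothetical helper for illustration only).

    voltages[i]        -- input voltage applied to row i (volts)
    conductances[i][j] -- conductance of the cell at row i, column j (siemens),
                          standing in for a stored weight
    Returns the current collected on each column line (amperes).
    """
    rows = len(conductances)
    if len(voltages) != rows:
        raise ValueError("one input voltage per row is required")
    cols = len(conductances[0]) if rows else 0
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law: the cell passes I = V * G.
            # Kirchhoff's current law: currents on the same column line add up.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


if __name__ == "__main__":
    # The column currents equal the vector-matrix product v @ G.
    v = [0.5, 1.0, 0.2]
    G = [[1e-6, 2e-6],
         [3e-6, 0.0],
         [4e-6, 5e-6]]
    print(crossbar_currents(v, G))
```

Each column current is a dot product of the input vector with one column of weights, which is why no explicit memory read of the weights is needed.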

M1000-Series Analog Matrix Processor (AMP)

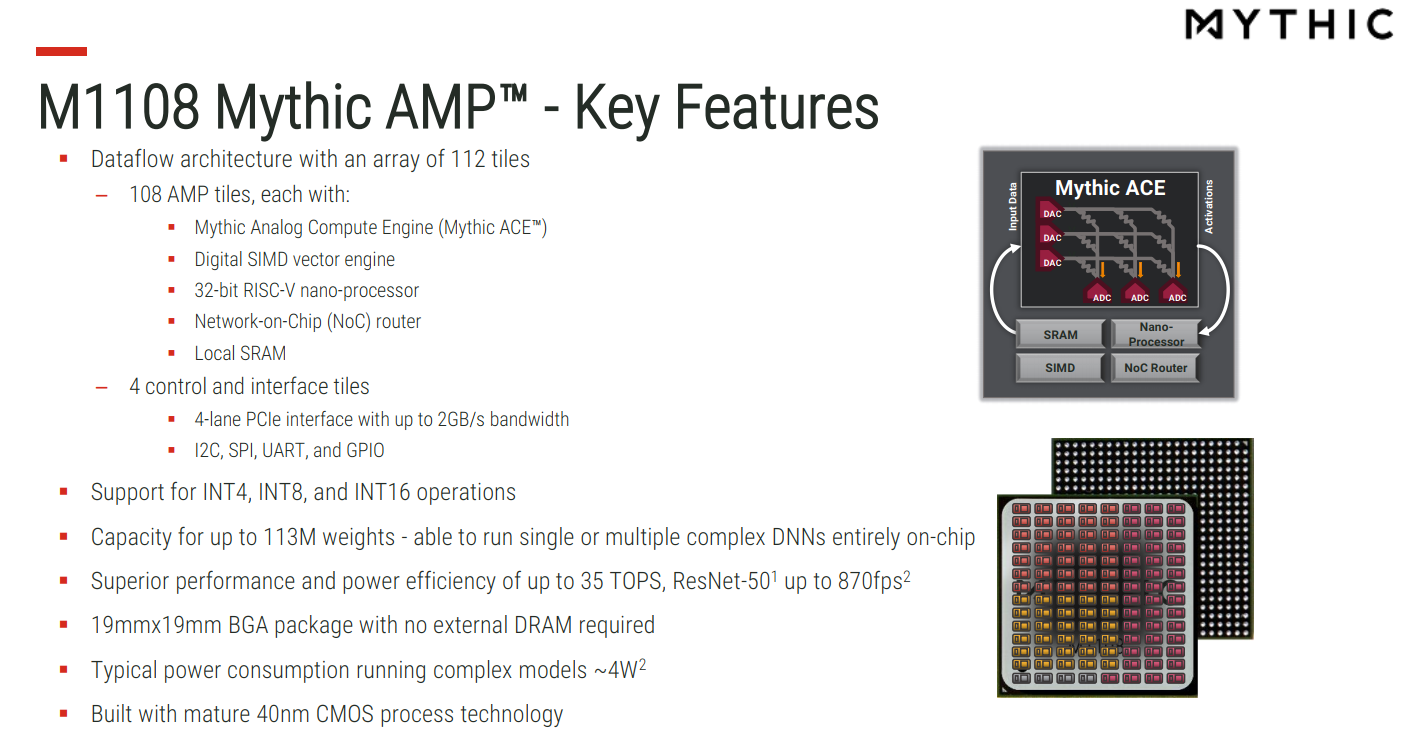

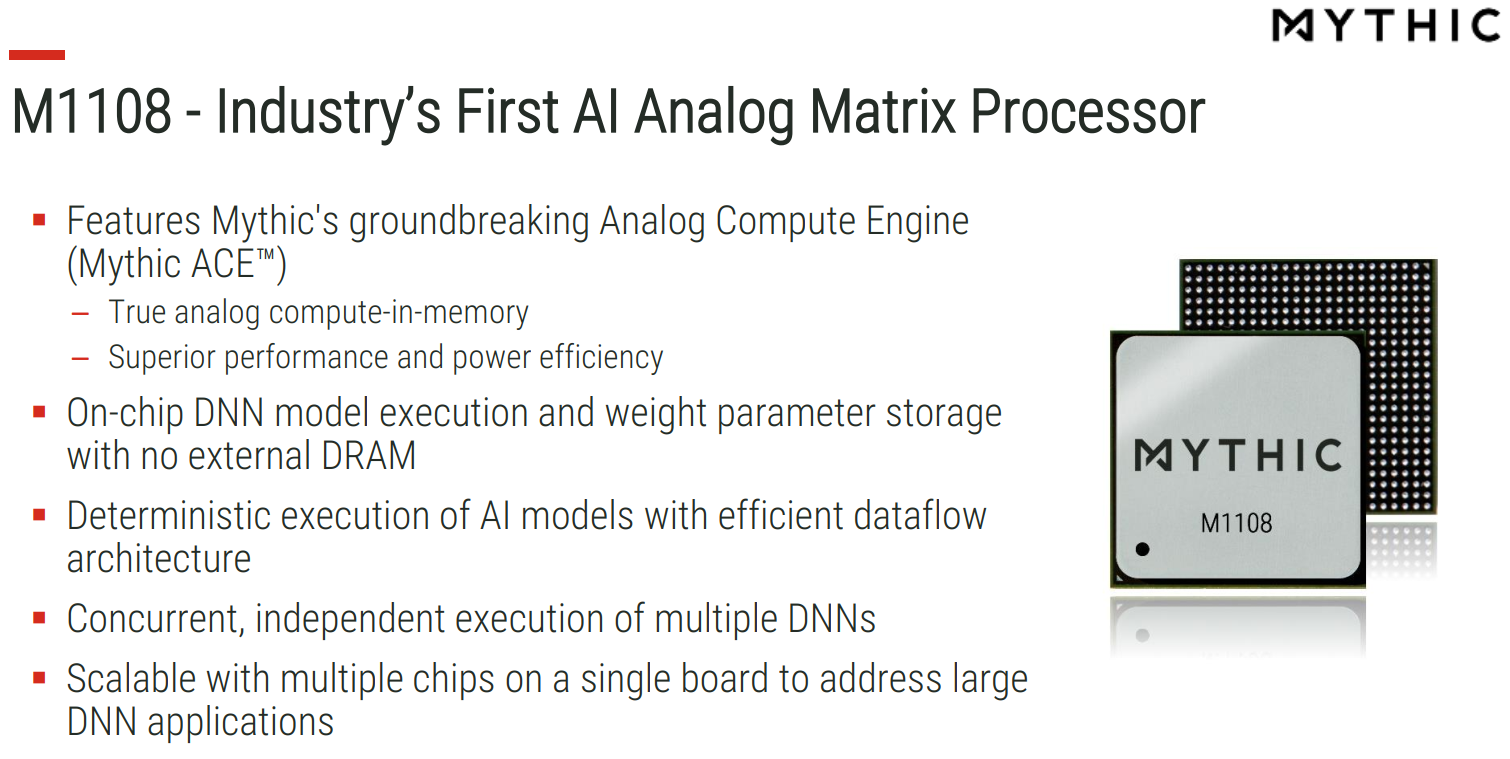

Mythic calls their neural processors Analog Matrix Processors, or AMPs. The chip itself features a tiled architecture. Each tile contains a Mythic Analog Compute Engine (ACE), a digital SIMD vector engine, a 32-bit RISC-V nano-processor, a small amount of local SRAM, and a network-on-chip (NoC) router. A number of additional tiles handle control and interfaces.

Mythic’s first product, launched last November, is the M1108 AMP. This is the flagship model and the chip with the largest tile configuration in this generation: the ‘108’ represents the 108 compute tiles on the die. There are also 4 control and interface tiles, including a PCIe Gen2 x4 interface. Each compute tile contains a 1024×1024 flash array which, at one 8-bit weight per cell, amounts to 1 MiB of weight storage per tile, or 108 MiB for the M1108. The chip comes in a 19 mm × 19 mm BGA package and consumes only about 4 W, a fraction of the power consumed by competing accelerators.
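
The capacity figures follow from simple arithmetic. The Python sketch below assumes one 8-bit weight (one byte) per flash cell, which is consistent with the article’s later figure of about 1.3 billion weights in 1.1875 GiB; the `AmpConfig` class and its field names are hypothetical and only model the quantities quoted above.

```python
from dataclasses import dataclass

MIB = 1024 * 1024  # bytes per mebibyte


@dataclass(frozen=True)
class AmpConfig:
    """Hypothetical model of an AMP die's tile layout (illustrative only)."""
    name: str
    compute_tiles: int          # tiles containing an Analog Compute Engine
    control_tiles: int = 4      # control/interface tiles (M1108 figure)
    array_rows: int = 1024      # flash array rows per compute tile
    array_cols: int = 1024      # flash array columns per compute tile
    bytes_per_weight: int = 1   # assumption: one 8-bit weight per cell

    @property
    def total_tiles(self) -> int:
        return self.compute_tiles + self.control_tiles

    @property
    def weights_per_tile(self) -> int:
        return self.array_rows * self.array_cols

    @property
    def total_weights(self) -> int:
        return self.compute_tiles * self.weights_per_tile

    @property
    def capacity_mib(self) -> float:
        return self.total_weights * self.bytes_per_weight / MIB


if __name__ == "__main__":
    m1108 = AmpConfig("M1108", compute_tiles=108)
    print(m1108.weights_per_tile)  # 1048576 weights, i.e. 1 MiB per tile
    print(m1108.capacity_mib)      # 108.0
    print(m1108.total_tiles)       # 112
```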

108 MiB of storage capacity for weights is a lot. It would be a lot of memory even if the chip were built on a leading-edge process, but Mythic is fabricating it on a mature and cost-effective 40-nanometer CMOS process. Keep in mind that the target market for these chips is the billions of edge devices: industrial applications (machine vision, autonomous drones, etc.), video surveillance, vision systems, and other low-power applications. Here, the models can fit entirely on-chip, and multiple models can usually fit in that space at once. For this reason, typical use cases need no external memory at all, making the M1108 a single-chip platform that further reduces the bill of materials (BoM).
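
Whether a workload avoids external memory comes down to whether its combined weight count fits in the on-chip flash. The Python sketch below assumes one weight per flash cell and perfect packing, so it gives an optimistic upper bound; a real compiler’s tile mapping will leave some capacity unused. The `fits_on_chip` helper is hypothetical, and the model names and parameter counts in the usage example are placeholders, not measured figures.

```python
from typing import Dict, Tuple

WEIGHTS_PER_TILE = 1024 * 1024  # 1024 x 1024 flash array, one weight per cell (assumption)


def fits_on_chip(param_counts: Dict[str, int], compute_tiles: int = 108,
                 weights_per_tile: int = WEIGHTS_PER_TILE) -> Tuple[bool, int]:
    """Return (fits, spare_weights) for a set of models sharing one chip.

    Assumes perfect packing of weights into tiles, so treat a True result
    as an upper bound rather than a guarantee.
    """
    capacity = compute_tiles * weights_per_tile
    needed = sum(param_counts.values())
    return needed <= capacity, capacity - needed


if __name__ == "__main__":
    # Hypothetical models sharing a single M1108-sized chip (108 compute tiles).
    models = {"detector": 40_000_000, "classifier": 25_000_000, "segmenter": 30_000_000}
    ok, spare = fits_on_chip(models)
    print(ok, spare)
```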

Mythic offers the M1108 AMP in a couple of ways. Customers can buy the chip itself and integrate it directly into their system however they see fit. Mythic also offers a PCIe evaluation kit as well as an M.2 card for customers that want to take a much simpler route.

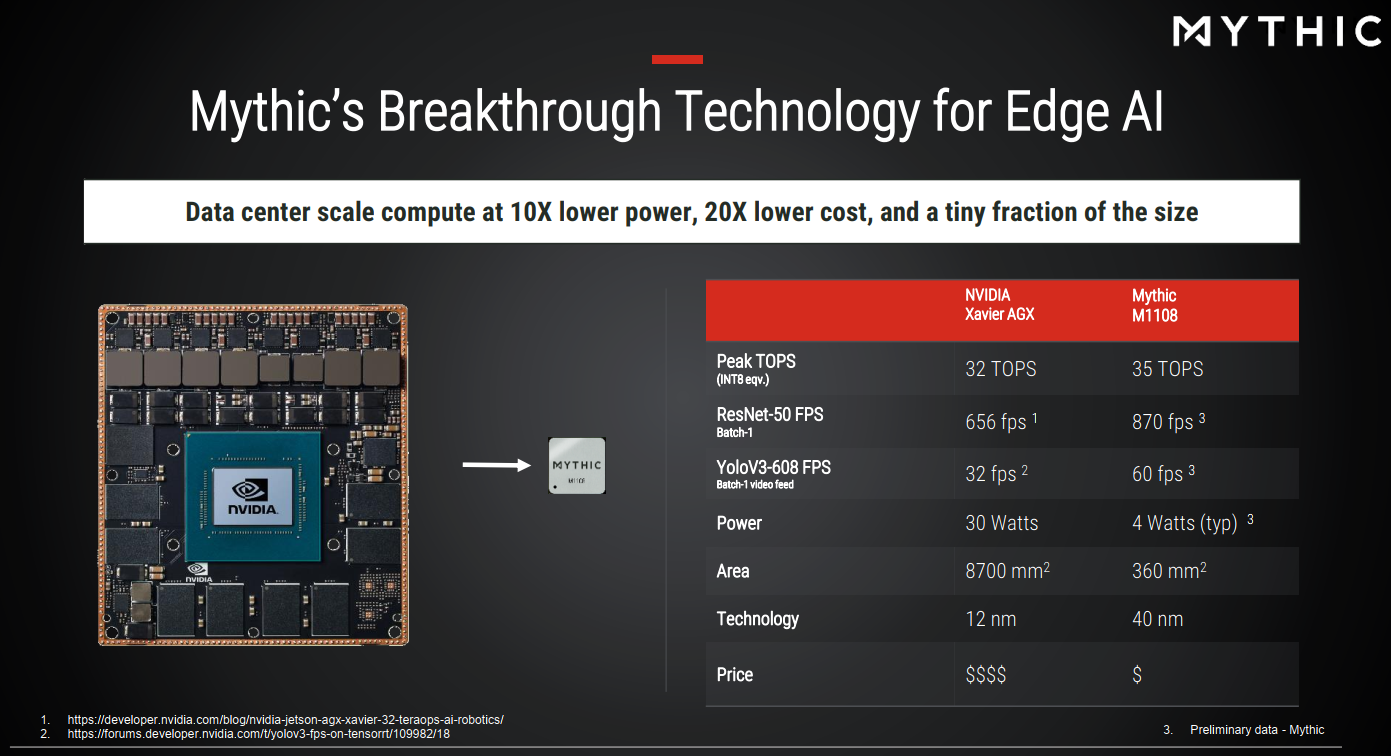

When comparing their M1108 to Nvidia’s Jetson AGX Xavier, Mythic claims superior performance at a tenth of the power, while keeping the platform size to a fraction (no need for expensive external memory or complex power delivery). But perhaps the biggest difference is price: at current prices, the M1108 is around 30x cheaper. Now, we do have to be careful with that comparison, as it is far from apples-to-apples. The Xavier SoC is loaded with many other features, such as a programmable vision accelerator (PVA), a stereo and optical flow engine, and of course the CPU cores and GPU compute. You are paying a premium for those features, but in the edge and embedded space Mythic is targeting, many customers will leave much of that hardware unused.

M1076 – Smaller form-factor

In early June, Mythic introduced a second product in the series, the M1076 AMP. As the model number implies, this is a cut-down version of the M1108 AMP with just 76 tiles. It will likely be cheaper than the M1108, and Mythic expects it to be more popular as well. The rationale behind this version is the form factor. The original M1108 was sized for an M.2 M-key card with physical dimensions of 22 mm × 80 mm, but Mythic says there is strong interest in the embedded space for the even smaller A+E-key variant, which measures just 22 mm × 30 mm. The original M1108 AMP was simply too big for that.

The M1076 supports up to 25 TOPS and, because it has 32 fewer tiles, operates at lower power: a roughly 3 W envelope, about 1 W less than the M1108.

One interesting aspect of the new M1076 AMP is the multi-chip configuration Mythic is offering for customers to experiment with: a PCIe card carrying up to 16 AMPs. In the largest configuration, a full card with 16 AMPs tops out at around 75 W and provides 400 TOPS of compute along with a whopping 1.1875 GiB of on-die flash storage, enough for close to 1.3 billion weights.
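
The card-level figures scale linearly from the per-chip numbers. The Python sketch below checks them, assuming 76 compute tiles per M1076, 1024×1024 weights per tile at one byte each, and the quoted 25 TOPS per chip; `card_totals` is a hypothetical helper. Only compute and storage are derived, and the card’s ~75 W rating is taken as quoted rather than computed.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB

TILES_PER_M1076 = 76             # compute tiles per chip
WEIGHTS_PER_TILE = 1024 * 1024   # 1024 x 1024 flash array, one 8-bit weight per cell (assumption)
TOPS_PER_M1076 = 25              # peak figure quoted by Mythic


def card_totals(num_chips: int) -> dict:
    """Aggregate peak compute and weight storage for a multi-AMP card."""
    weights = num_chips * TILES_PER_M1076 * WEIGHTS_PER_TILE
    return {
        "tops": num_chips * TOPS_PER_M1076,
        "weights": weights,
        "storage_gib": weights / GIB,  # one byte per weight
    }


if __name__ == "__main__":
    print(card_totals(16))
```

For a full 16-chip card this yields 400 TOPS and 1,275,068,416 weights, or exactly 1.1875 GiB, which matches the “close to 1.3 billion weights” figure.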

Both the M1108 and the M1076 along with the M.2 and PCIe card versions are available for customer evaluation.
